3. Training the Image Classifier

Written by Audrey Tam & Matthijs Hollemans

Update Note: This chapter has been updated to iOS 13, Xcode 11 and Swift 5.1

In the previous chapter, you saw how to use a trained model to classify images with Core ML and Vision. However, using other people’s models is often not sufficient — the models may not do exactly what you want or you may want to use your own data and categories — and so it’s good to know how to train your own models.

In the chapters that follow, you’ll learn to create your own models with the tools and libraries that machine learning experts commonly use. Apple provides Create ML, a machine learning framework for creating models in Xcode. In this chapter, you’ll use Create ML to train the snacks model.

The dataset

Before you can train a model, you need data. You may recall from the introduction that machine learning is all about training a model to learn “rules” by looking at a lot of examples.

Since you’re building an image classifier, it makes sense that the examples are going to be images. You’re going to train an image classifier that can tell apart 20 different types of snacks.

Here are the possible categories, again:

Healthy: apple, banana, carrot, grape, juice, orange, pineapple, salad, strawberry, watermelon

Unhealthy: cake, candy, cookie, doughnut, hot dog, ice cream, muffin, popcorn, pretzel, waffle

Double-click starter/snacks-download-link.webloc to download and unzip the snacks dataset in your default download location, then move the snacks folder into the dataset folder. It contains the images on which you’ll train the model.

This dataset has almost 7,000 images — roughly 350 images for each of these categories.

The dataset is split into three folders: train, test and val. For training the model, you will use only the roughly 4,800 images from the train folder, known as the training set.

The images from the val and test folders (950 each) are used to measure how well the model works once it has been trained. These are known as the validation set and the test set, respectively. It’s important that you don’t use images from the validation set or test set for training; otherwise, you won’t be able to get a reliable estimate of the quality of your model. We’ll talk more about this later in the chapter.

Here are a few examples of training images:

As you can see, the images come in all kinds of shapes and sizes. The name of the folder will be used as the class name — also called the label or the target.
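The folder-per-class layout is easy to work with in code. The following Python sketch is not how Create ML reads the data internally; it only illustrates the idea of using each subfolder name as the label. The function names `load_labeled_paths` and `count_per_class`, and the `.jpg` filter, are assumptions made for this example.

```python
from collections import Counter
from pathlib import Path


def load_labeled_paths(root):
    """Return (image_path, label) pairs, using each subfolder name as the label."""
    examples = []
    for class_dir in sorted(Path(root).iterdir()):
        if not class_dir.is_dir():
            continue  # skip stray files such as .DS_Store
        for image_path in sorted(class_dir.glob("*.jpg")):
            examples.append((image_path, class_dir.name))
    return examples


def count_per_class(examples):
    """Count how many examples carry each label."""
    return Counter(label for _, label in examples)
```

Calling `count_per_class(load_labeled_paths("snacks/train"))` would give you a quick check of how many training images each class has.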

Create ML

You will now use Create ML to train a multi-class classifier on the snacks dataset.

In the Xcode menu, select Xcode ▸ Open Developer Tool ▸ Create ML:

A Finder window opens: click New Document. In the Choose a Template window, select Image ▸ Image Classifier, and click Next:

Name your classifier MultiSnacks, and click Next:

Choose a place to save your image classifier, then click Create to display your classifier window:

The first step is to add Training Data: click Choose ▸ Select Files… then navigate to snacks/train, and click Open:

The window updates to show the number of classes and training data items, and the status shows Ready to train. Click the Train button:

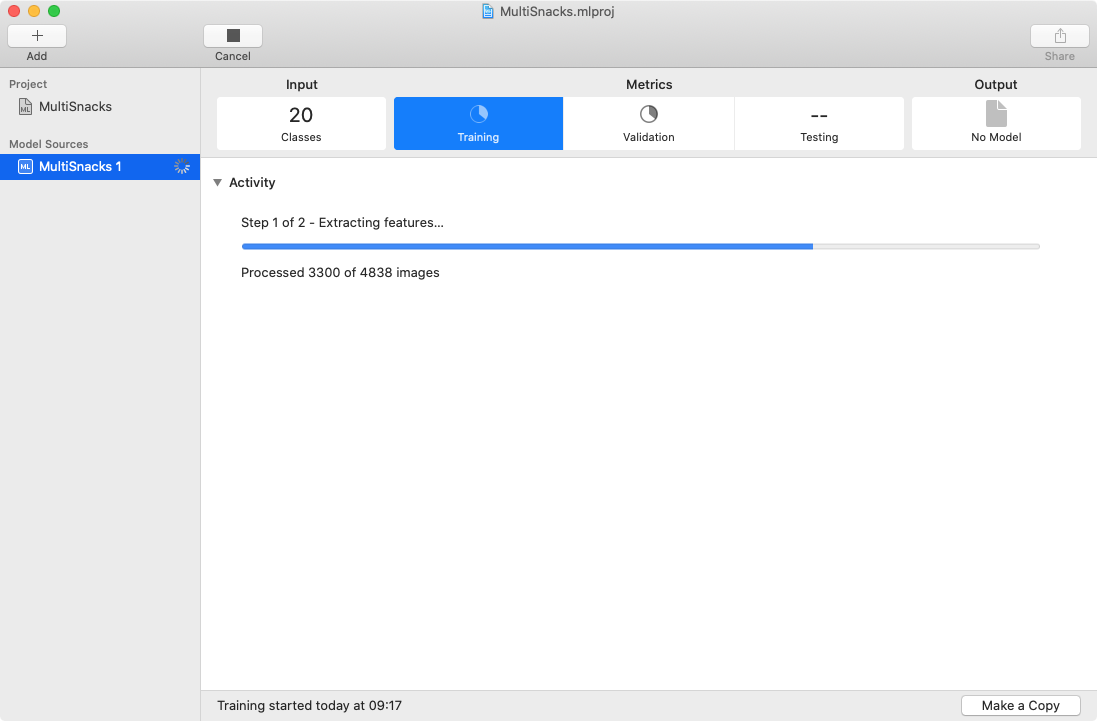

The window shows Activity: Step 1 is to extract features, and there’s a progress bar to show how many images have been processed:

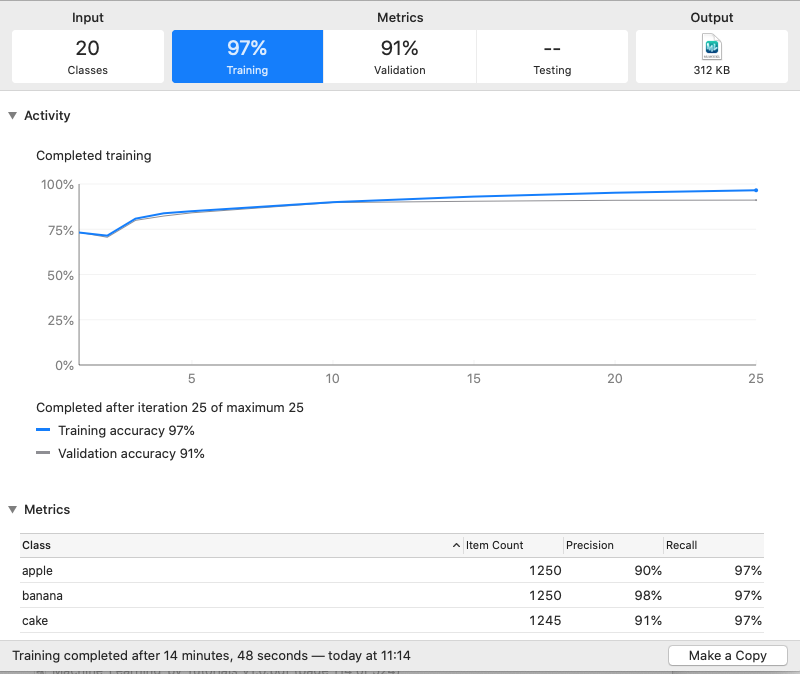

In Step 2, training, a graph appears, displaying Training accuracy and Validation accuracy for each iteration. When training is complete, you get a Metrics table listing Precision and Recall for the 20 classes:

Note: You may be wondering how Create ML can load all of the training data in memory, since 4,800 images may actually take up more physical memory than you have RAM. Create ML loads data into MLDataTable structures, which keep only the image metadata in memory, then loads the images themselves on demand. This means that you can have very large MLDataTables that contain a lot of data.
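To make the idea of on-demand loading concrete, here is a minimal Python sketch. It is not MLDataTable's actual implementation, and the class name `LazyImageTable` is hypothetical; it only shows how a table can hold lightweight file paths and read the heavy image bytes when an item is requested.

```python
from pathlib import Path


class LazyImageTable:
    """Keeps only file paths in memory and reads image bytes on demand."""

    def __init__(self, paths):
        # Storing a path costs a few bytes; the image data stays on disk.
        self.paths = [Path(p) for p in paths]

    def __len__(self):
        return len(self.paths)

    def __getitem__(self, index):
        # The file is read only when this particular item is requested.
        return self.paths[index].read_bytes()
```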

Precision and recall

Create ML computes precision and recall for each class, which is useful for understanding which classes perform better than others. In my results, these values are mostly 100% or very close. But what do they mean?

Precision means: Of all the images that the model predicts to be “apple”, 98% of them are actually apples. Precision is high if we don’t have many false positives, and it is low if we often misclassify something as being “apple.” A false positive happens if something isn’t actually from class “X”, but the model mistakenly thinks it is.

Recall is similar, but different: It counts how many apples the model found among the total number of apples — in this case, it found 100% of the “apple” images. This gives us an idea of the number of false negatives. If recall is low, it means we actually missed a lot of the apples. A false negative happens if something really is of class “X”, but the model thinks it’s a different class.

Again, we like to see high numbers here, and > 95% is fantastic. For example, the “apple” class has a precision of 98%, which means that about one out of 50 things the model claimed were “apple” really aren’t. Its recall of 100% means that the model found all of the apple images it was evaluated on. That’s awesome!

For precision, the worst performing classes are “apple” (98%) and “cake” (98%). For recall, the worst is “ice cream” (98%). These would be the classes to pay attention to, in order to improve the model — for example, by gathering more or better training images for these classes.
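If you want to check these definitions yourself, the arithmetic is short. The sketch below uses made-up counts chosen to reproduce the “apple” numbers quoted above (98% precision, 100% recall); the counts are illustrative and are not taken from Create ML’s output.

```python
def precision(true_positives, false_positives):
    """Of everything predicted as the class, the fraction that really is the class."""
    return true_positives / (true_positives + false_positives)


def recall(true_positives, false_negatives):
    """Of everything that really is the class, the fraction the model found."""
    return true_positives / (true_positives + false_negatives)


# Hypothetical counts: the model says "apple" 50 times, is right 49 times,
# and does not miss any real apple.
apple_precision = precision(true_positives=49, false_positives=1)
apple_recall = recall(true_positives=49, false_negatives=0)
```

With these counts, one false positive in 50 predictions is what pulls precision down to 98%, while zero false negatives keeps recall at 100%.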

Your accuracy values and metrics might be different from what I got, because there’s some randomness in how the model is trained and validated. Continue reading, to learn about dataset curation and transfer learning.

How we created the dataset

Collecting good training data can be very time consuming! It’s often considered to be the most expensive part of machine learning. Despite — or because of — the wealth of data available on the internet, you’ll often need to curate your dataset: You must manually go through the data items to remove or clean up bad data or to correct classification errors.

The images in this dataset are from the Google Open Images Dataset, which contains more than 9 million images organized into thousands of categories. The Open Images project doesn’t actually host the images — only the URLs to these images. Most of the images come from Flickr and are all licensed under a Creative Commons license (CC BY 2.0). You can find the Open Images Dataset at storage.googleapis.com/openimages/web/index.html

To create the snacks dataset, we first looked through the thousands of classes in Open Images and picked 20 categories of healthy and unhealthy snacks. We then downloaded the annotations for all the images. The annotations contain metadata about the images, such as what their class is. Since, in Open Images, the images can contain multiple objects, they may also have multiple classes.

We randomly grabbed a subset of image URLs for each of our 20 chosen classes, and we then downloaded these images using a Python script. Quite a few of the images were no longer available or just not very good, while some were even mislabeled as being from the wrong category, so we went through the downloaded images by hand to clean them up.

Here are some of the things we had to do to clean up the data:

- The pictures in Open Images often contain more than one object, such as an apple and a banana, and we can’t use these to train the classifier because the classifier expects only a single label per image. The image must have either an apple or a banana, not both; otherwise, it will just confuse the learning algorithm. We only kept images with just one main object.

- Sometimes, the lines between categories are a little blurry. Many downloaded images from the cake category were of cupcakes, which are very similar to muffins, so we removed these ambiguous images from the dataset. For our purposes, we decided that cupcakes belong to the muffins category, not the cake category.

- We made sure the selected images were meaningful for the problem you’re trying to solve. The snacks classifier was intended to work on food items you’d typically find in a home or office. But the banana category had a lot of images of banana trees with stacks of green bananas — that’s not the kind of banana we wanted the classifier to recognize.

- Likewise, we included a variety of images. We did not only want to include “perfect” pictures that look like they could be found in cookbooks, but photos with a variety of backgrounds, lighting conditions and humans in them (since it’s likely that you’ll use the app in your home or office, and the pictures you take will have people in them).

- We threw out images in which the object of interest was very small. Since the neural network resizes the images to 299x299 pixels, a very small object would be just a few pixels in size, too small for the model to learn anything from.

The process of downloading images and curating them was repeated several times, until we had a dataset that gave good results. Simply by improving the data, the accuracy of the model also improved.

When training an image classifier, more images is better. However, we limited the dataset to about 350 images per category to keep the download small and training times short so that using this dataset would be accessible to all our readers. Popular datasets such as ImageNet have 1,000 or more images per category, but they are also hundreds of gigabytes.

The final dataset has 350 images per category, which are split into 250 training images, 50 validation images and 50 test images. Some categories, such as pretzel and popcorn, have fewer images because there simply weren’t more suitable ones available in Open Images.

It’s not necessary to have exactly the same number of images in each category, but the difference should not be too great, or the model will be tempted to learn more about one class than another. Such a dataset is called imbalanced, and you need special techniques to deal with it, which we aren’t going to cover here.

All images were resized so that the smallest side is 256 pixels. This isn’t necessary, but it does make the download smaller and training a bit faster. We also stripped the EXIF metadata from images because some of the Python image loaders give warnings on those images, and that’s just annoying. The downside of removing this metadata is that EXIF contains orientation info for the images, so some images may actually appear upside down… Oh well.
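The resizing rule is simple to express in code. This sketch only computes the new dimensions; it does not load or resize real images. The function name `resize_to_smallest_side` is an assumption for illustration and is not the script we actually used.

```python
def resize_to_smallest_side(width, height, target=256):
    """Scale (width, height) so the smallest side equals `target`, keeping the aspect ratio."""
    scale = target / min(width, height)
    return round(width * scale), round(height * scale)


# A 1024x768 landscape photo becomes 341x256.
landscape = resize_to_smallest_side(1024, 768)
# A 300x600 portrait photo becomes 256x512.
portrait = resize_to_smallest_side(300, 600)
```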

Creating the dataset took quite a while, but it’s vital. If your dataset is not high quality, then the model won’t be able to learn what you want it to learn. As they say, it’s not who has the best algorithms, but who has the best data.

Note: You are free to use the images in the snacks dataset as long as you stick to the rules of the CC BY 2.0 license. The filenames have the same IDs as used in the Google Open Images annotations. You can use the included credits.csv file to look up the original URLs of where the images came from.

Transfer learning

So what’s happening while Create ML trains? Create ML is busy training your model using transfer learning. As you may recall from the first chapter, transfer learning is a clever way to quickly train models by reusing knowledge from another model that was originally trained on a different task.

The HealthySnacks and MultiSnacks models you used in the previous chapter were built on top of something called SqueezeNet. The underlying model used by Create ML is not SqueezeNet but VisionFeaturePrint_Screen, a model that was designed by Apple specifically for getting high-quality results on iOS devices.

VisionFeaturePrint_Screen was pre-trained on a ginormous dataset to recognize a huge number of classes. It did this by learning what features to look for in an image and by learning how to combine these features to classify the image. Almost all of the training time for your dataset is the time the model spends extracting 2,048 features from your images. These include low-level edges, mid-level shapes and task-specific high-level features.

Once the features have been extracted, Create ML spends only a relatively tiny amount of time training a logistic regression model to separate your images into 20 classes. It’s similar to fitting a straight line to scattered points, but in 2,048 dimensions instead of two.

Transfer learning only works successfully when features of your dataset are reasonably similar to features of the dataset that was used to train the model. A model pre-trained on ImageNet — a large collection of photos — might not transfer well to pencil drawings or microscopy images.

A closer look at the training loop

Transfer learning takes less time than training a neural network from scratch. However, before we can clearly understand how transfer learning works, we have to gain a little insight into what it means to train a neural network first. It’s worth recalling an image we presented in the first chapter:

In words, this is how a neural network is trained:

1. Initialize the neural network’s “brain” with small random numbers. This is why an untrained model just makes random guesses: its knowledge literally is random. These numbers are known as the weights or learned parameters of the model. Training is the process of changing these weights from random numbers into something meaningful.

2. Let the neural network make predictions for all the training examples. For an image classifier, the training examples are images.

3. Compare the predictions to the expected answers. When you’re training a classifier, the expected answers (commonly referred to as the “targets”) are the class labels for the training images. The targets are used to compute the “error” or loss, a measure of how far off the predictions are from the expected answers. The loss is a multi-dimensional function that has a minimum value for some particular configuration of the weights, and the goal of training is to determine the best possible values for the weights that get the loss very close to this minimum. On an untrained model, where the weights are random, the loss is high. The lower this loss value, the more the model has learned.

4. To improve the weights and reduce the error, you calculate the gradient of the loss function. This gradient tells you how much each weight contributed to the loss. Using this gradient, you can correct the weights so that next time the loss is a little lower. This correction step is called gradient descent, and happens many times during a training session. This nudges the model’s knowledge a little bit in the right direction so that, next time, it will make slightly more correct predictions for the training images.

   For large datasets, using all the training data to calculate the gradient takes a long time. Stochastic gradient descent (SGD) estimates the gradient from randomly selected mini-batches of training data. This is like taking a survey of voters ahead of election day: If your sample is representative of the whole dataset, then the survey results accurately predict the final results.

5. Go to step two to repeat this process hundreds of times for all the images in the training set. With each training step, the model becomes a tiny bit better: The brain’s learned parameters change from random numbers into something that is more suitable to the task that you want it to learn. Over time, the model learns to make better and better predictions.

Stochastic gradient descent is a rather brute-force approach, but it’s the only method that is practical for training deep neural networks. Unfortunately, it’s also rather slow. To make SGD work reliably, you can only make small adjustments at a time to the learned parameters, so it takes a lot of training steps (hundreds of thousands or more) to make the model learn anything.
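The training loop described above can be sketched in a few lines of Python. To keep it tiny, this example trains a model with just two weights (a line, `w * x + b`) instead of a neural network, and the toy data, learning rate and batch size are assumptions chosen for illustration. The structure is the same, though: random initialization, predictions, loss, gradient, small correction, repeat.

```python
import random


def mean_squared_error(examples, w, b):
    """The loss: average squared difference between predictions and targets."""
    return sum(((w * x + b) - y) ** 2 for x, y in examples) / len(examples)


def train_sgd(examples, learning_rate=0.1, epochs=500, batch_size=4, seed=0):
    rng = random.Random(seed)
    # Step 1: initialize the weights with small random numbers.
    w = rng.uniform(-0.1, 0.1)
    b = rng.uniform(-0.1, 0.1)
    for _ in range(epochs):
        shuffled = examples[:]
        rng.shuffle(shuffled)  # random mini-batches: the "stochastic" part
        for start in range(0, len(shuffled), batch_size):
            batch = shuffled[start:start + batch_size]
            # Steps 2 and 3: make predictions and compare them to the targets.
            errors = [(w * x + b) - y for x, y in batch]
            # Step 4: gradient of the mean squared error on this mini-batch.
            grad_w = sum(2 * e * x for e, (x, _) in zip(errors, batch)) / len(batch)
            grad_b = sum(2 * e for e in errors) / len(batch)
            # Nudge the weights a little in the direction that lowers the loss.
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
        # Step 5: the outer loop repeats this for the next pass over the data.
    return w, b


# Toy training set generated from the "rule" y = 2x + 1.
examples = [(i / 10, 2 * (i / 10) + 1) for i in range(11)]
w, b = train_sgd(examples)
```

After training, `w` and `b` should end up close to 2 and 1, and `mean_squared_error(examples, w, b)` should be close to zero. Note how many small steps even this two-weight model takes; a deep network has millions of weights.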

You’ve seen that an untrained model is initialized with random weights. In order for such a model to learn the desired task, such as image classification, it requires a lot of training data. Image classifiers are often trained on datasets of thousands of images per class. If you have too few images, the model won’t be able to learn anything. Machine learning is very data hungry!

Those are the two big downsides of training a deep learning model from scratch: You need a lot of data and it’s slow.

Create ML uses a smarter approach. Instead of starting with an untrained model that has only random numbers in its brain, Create ML takes a neural network that has already been successfully trained on a large dataset, and then it fine-tunes it on your own data. This involves training only a small portion of the model instead of the whole thing. This shortcut is called transfer learning because you’re transferring the knowledge that the neural network has learned on some other type of problem to your specific task. It’s a lot faster than training a model from scratch, and it can work just as well. It’s the machine learning equivalent of “work smarter, not harder!”

The pre-trained VisionFeaturePrint_Screen model that Create ML uses has seen lots of photos of food and drinks, so it already has a lot of knowledge about the kinds of images that you’ll be using it with. Using transfer learning, you can take advantage of this existing knowledge.

Note: When you use transfer learning, you need to choose a base model that you’ll use for feature extraction. The two base models that you’ve seen so far are SqueezeNet and VisionFeaturePrint_Screen. Turi Create analyzes your training data to select the most suitable base model. Currently, Create ML’s image classifier always uses the VisionFeaturePrint_Screen base model. It’s large, with 2,048 features, so the feature extraction process takes a while. The good news is that VisionFeaturePrint_Screen is part of iOS 12 and the Vision framework, so models built on this are tiny (kilobytes instead of megabytes) because they do not need to include the base model. The bad news is that models trained with Create ML will not work on iOS 11, or on other platforms such as Android.

Since this pre-trained base model doesn’t yet know about our 20 specific types of snacks, you cannot plug it directly into the snack detector app, but you can use it as a feature extractor.

What is feature extraction?

You may recall that machine learning happens on “features,” where we’ve defined a feature to be any kind of data item that we find interesting. You could use the photo’s pixels as features but, as the previous chapter demonstrated, the individual RGB values don’t say much about what sort of objects are in the image.

VisionFeaturePrint_Screen transforms the pixel features, which are hard to understand, into features that are much more descriptive of the objects in the images.

This is the pipeline that we’ve talked about before. Here, however, the output of VisionFeaturePrint_Screen is not a probability distribution that says how likely it is that the image contains an object of each class.

VisionFeaturePrint_Screen’s output is, well, more features.

For each input image, VisionFeaturePrint_Screen produces a list of 2,048 numbers. These numbers represent the content of the image at a high level. Exactly what these numbers mean isn’t always easy to describe in words, but think of each image as now being a point in this new 2,048-dimensional space, wherein images with similar properties are grouped together.

For example, one of those 2,048 numbers could represent the color of a snack, and oranges and carrots would have very similar values in that dimension. Another feature could represent how elongated an object is, and bananas, carrots and hot dogs would have larger values than oranges and apples.

On the other hand, apples, oranges and doughnuts would score higher in the dimension for how “round” the snack is, while waffles would score lower in that dimension (assuming a negative value for that feature means squareness instead of roundness).
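Here is a toy version of that idea in Python. The three-number vectors below are invented for illustration (real feature vectors have 2,048 entries and no such tidy meanings), but they show how “similar images end up close together” turns into a distance calculation.

```python
import math

# Hypothetical features: (orange color, elongation, roundness), each from 0 to 1.
features = {
    "orange": (0.9, 0.1, 0.9),
    "carrot": (0.9, 0.9, 0.1),
    "banana": (0.2, 0.9, 0.1),
    "apple": (0.3, 0.1, 0.9),
}


def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest(name):
    """Return the other snack whose feature vector is closest to `name`."""
    others = (other for other in features if other != name)
    return min(others, key=lambda other: distance(features[name], features[other]))
```

With these made-up values, `nearest("banana")` is the carrot (both elongated) and `nearest("apple")` is the orange (both round).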

Models usually aren’t that interpretable, but you get the idea: These new 2,048 features describe the objects in the images by their true characteristics, which are much more informative than pixel intensities. However, you cannot simply draw a line (sorry, a hyperplane) through this 2,048-dimensional space to separate the images into the different classes we’re looking for, because VisionFeaturePrint_Screen is not trained on our own dataset. It was trained on ImageNet, which has 1,000 classes, not 20.

While VisionFeaturePrint_Screen does a good job of creating more useful features from the training images, in order to be able to classify these images we need to transform the data one more time into the 20-dimensional space that we can interpret as the probability distribution over our 20 types of snacks.

How do we do that? Well, Create ML uses these 2,048 numbers as the input to a new machine learning model called logistic regression. Instead of training a big, hairy model on images that have 150,000 features (difficult!), Create ML just trains a much simpler model on top of the 2,048 features that VisionFeaturePrint_Screen has extracted.

Logistic regression

By the time you’re done reading the previous section, Create ML has (hopefully) finished training your model. The status shows training took 2 minutes, 47 seconds — most of that time was spent on extracting features.

Note: I’m running Create ML on a 2018 MacBook Pro with a 6-core 2.7GHz CPU and Radeon 560 GPU. You’ll probably get slightly different results than this. Untrained models, in this case the logistic regression, are initialized with random numbers, which can cause variations between different training runs.

The solver that Create ML trains is a classifier called logistic regression. This is an old-school machine learning algorithm but it’s still extremely useful. It’s arguably the most common ML model in use today.

You may be familiar with another type of regression called linear regression. This is the act of fitting a line through points on a graph, something you may have done in high school where it was likely called the method of (ordinary) least squares.

Logistic regression does the same thing, but says: All points on one side of the line belong to class A, and all points on the other side of the line belong to class B. See how we’re literally drawing a line through space to separate it into two classes?

Because books cannot have 2,048-dimensional illustrations, the logistic regression in the above illustration is necessarily two-dimensional, but the algorithm works the same way, regardless of how many dimensions the input data has. Instead of a line, the decision boundary is really a high-dimensional object that we’ve mentioned before: a hyperplane. But because humans have trouble thinking in more than two or three dimensions, we prefer to visualize it with a straight line.

Create ML actually uses a small variation of the algorithm — multinomial logistic regression — that handles more than two classes.

Training the logistic regression algorithm to transform the 2,048-dimensional features into a space that allows us to draw separating lines between the 20 classes is fairly easy because we already start with features that say something meaningful about the image, rather than raw pixel values.

Note: If you’re wondering about exactly how logistic regression works, as usual it involves a transformation of some kind. The logistic regression model tries to find “coefficients” (its learned parameters) for the line so that a point that belongs to class A is transformed into a (large) negative value, and a point from class B is transformed into a (large) positive value. Whether a transformed point is positive or negative then determines its class.

The more ambiguous a point is, i.e. the closer the point is to the decision boundary, the closer its transformed value is to 0. The multinomial version extends this to allow for more than two classes. We’ll go into the math in a later chapter. For now, it suffices to understand that this algorithm finds a straight line / hyperplane that separates the points belonging to different classes in the best way possible.
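As a rough preview of that math, here is a minimal Python sketch of the prediction side of logistic regression. The weights are picked by hand rather than learned, and the function names are assumptions for this example; it is not Create ML’s implementation. The binary version squashes the transformed value into a probability with the sigmoid function, and the multinomial version uses softmax to turn one score per class into a probability distribution.

```python
import math


def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_binary(weights, bias, features):
    """Return (probability of class B, predicted class) for one point."""
    # The transformed value: negative on class A's side of the boundary,
    # positive on class B's side, and near 0 close to the boundary.
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z), ("B" if z > 0 else "A")


def softmax(scores):
    """Turn one score per class into probabilities that sum to 1."""
    largest = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hand-picked coefficients describing the line x1 - x2 = 0.
weights, bias = [1.0, -1.0], 0.0
far_b = predict_binary(weights, bias, [3.0, 0.0])      # well inside class B
far_a = predict_binary(weights, bias, [0.0, 3.0])      # well inside class A
ambiguous = predict_binary(weights, bias, [1.0, 1.0])  # exactly on the boundary
```

The point on the boundary gets a probability of exactly 0.5, which is the model’s way of saying it cannot tell the two classes apart there.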

Looking for validation

Even though there are 4,838 images in the snacks/train dataset, Create ML uses only 95% of them for training.

During training, it’s useful to periodically check how well the model is doing. For this purpose, Create ML sets aside a small portion of the training examples — 5%, so about 240 images. It doesn’t train the logistic classifier on these images, but only uses them to evaluate how well the model does (and possibly to tune certain settings of the logistic regression).
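Setting aside a random slice of the training data is easy to sketch. The following Python function is an illustration of the idea, not Create ML’s code; the name `split_train_validation` and the fixed seed are assumptions. The exact number of images Create ML holds out may differ slightly from what this rounding produces.

```python
import random


def split_train_validation(items, validation_fraction=0.05, seed=42):
    """Shuffle the items, then hold out a fraction of them for validation."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_validation = round(len(shuffled) * validation_fraction)
    return shuffled[n_validation:], shuffled[:n_validation]


# Stand-ins for the 4,838 image paths in snacks/train.
image_ids = list(range(4838))
train_items, validation_items = split_train_validation(image_ids)
```

Shuffling before splitting matters: the images are stored folder by folder, so taking the first 5% without shuffling would hold out images from only one or two classes.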

This is why the output has one graph for training accuracy and one for validation accuracy:

After 12 iterations, validation accuracy levels off at 94%, meaning that out of 100 validation examples it correctly predicts the class for 94 of them. But training accuracy keeps increasing to 100%: the classifier is correct for 10 out of 10 training images.

Note: What Create ML calls an iteration is one pass through the entire training set, also known as an “epoch”. This means Create ML has given the model a chance to look at all 4,582 training images once (or rather, the extracted feature vectors of all training images). If you do 10 iterations, the model will have seen each training image (or its feature vectors) 10 times.

By default, Create ML trains the logistic regression for up to 25 iterations, but you can change this with the Maximum Iterations parameter. In general, the more iterations, the better the model, but also the longer you have to wait for training to finish. But training the logistic regression doesn’t take long, so no problem!

The catch is that changing a setting means doing the feature extraction all over again! If your Mac did the feature extraction in five minutes or less, go ahead and see what happens if you reduce the number of iterations:

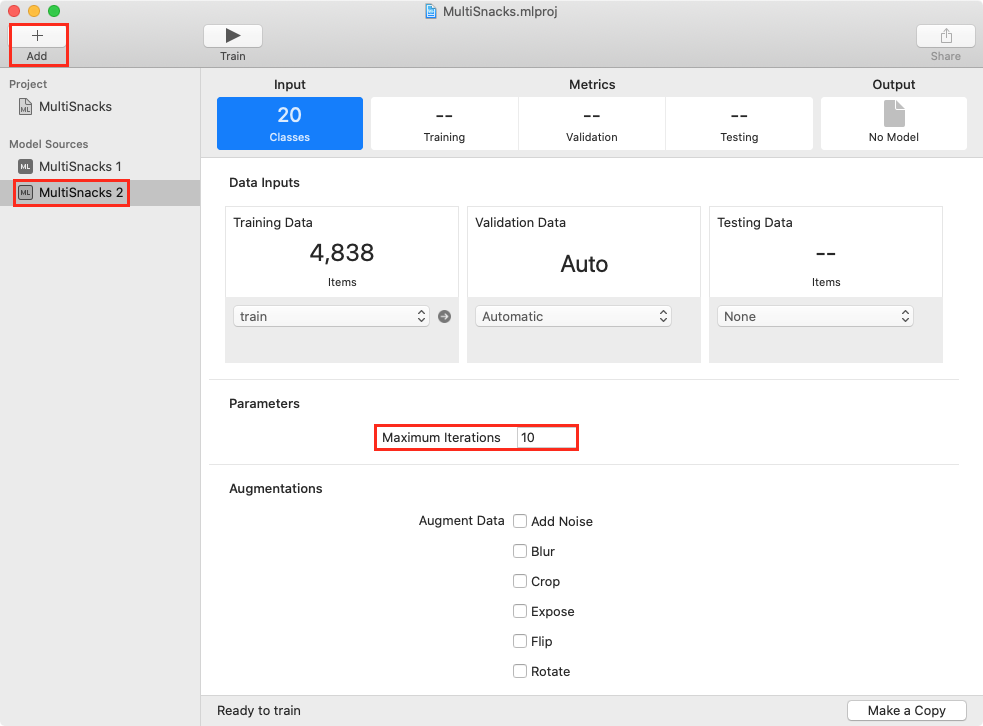

- Click the + button to add a new Model Source: you’re back to the start window.

- In the Training Data Choose menu, select train. Notice the Automatic setting for Validation Data. Soon, you’ll use snacks/val for this instead.

- Decrease Max iterations to 10.

- Click Train.

If you stick around to watch, you’ll see how almost all the training time is used for extracting features. In Chapter 5, “Digging Deeper into Turi Create”, you’ll learn how to save extracted features, so you can try different training parameters without having to wait.

Here’s what happened on my Mac: With 10 iterations, training completed after 2 minutes, 38 seconds — only 9 seconds less than 25 iterations! But accuracy values are much lower:

Training accuracy (92%) decreased more than validation accuracy (89%), but the two values are closer (3%).

Now see what happens when you use the snacks/val subdirectory, which has about 50 images per class, instead of letting Create ML choose 5% at random.

Add a third Model Source, and rename it Multisnacks manual val. This is a good time to rename the other two to Multisnacks 25 iter and Multisnacks 10 iter.

In Multisnacks manual val, choose train for Training Data, then select snacks/val for Validation Data:

Leave Max iterations at 25, and click Train.

Here’s what I got:

This is with 955 validation images, not 240. And this time the training used all 4,838 training images. But validation accuracy actually got worse, indicating the model may be overfitting.

Overfitting happens

Overfitting is a term you hear a lot in machine learning. It means that the model has started to remember specific training images. For example, the image train/ice cream/b0fff2ec6c49c718.jpg has a person in a blue shirt enjoying a sundae:

Suppose a classifier learns a rule that says, “If there is a big blue blob in the image, then the class is ice cream.” That’s obviously not what you want the model to learn, as the blue shirt does not have anything to do with ice cream in general. It just happens to work for this particular training image.

This is why you use a validation set of images that are not used for training. Since the model has never seen these images before, it hasn’t learned anything about them, making this a good test of how well the trained model can generalize to new images.

So the true accuracy of this particular model is 92% correct (the validation accuracy), not 98% (the training accuracy). If you only look at the training accuracy, your estimate of the model’s accuracy can be too optimistic. Ideally, you want the validation accuracy to be similar to the training accuracy, as it was after only 10 iterations — that means that your model is doing a good job.

Typically, the validation accuracy is a bit lower, but if the gap between them is too big, it means the model has been learning too many irrelevant details from the training set — in effect, memorizing what the result should be for specific training images. Overfitting is one of the most prevalent issues with training deep learning models.
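One simple way to keep an eye on overfitting is to compute the gap between the two accuracies. This Python sketch uses the accuracy figures quoted in this chapter; the helper names are assumptions for illustration.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for p, t in zip(predictions, labels) if p == t)
    return correct / len(labels)


def generalization_gap(training_accuracy, validation_accuracy):
    """How much better the model does on images it has trained on."""
    return training_accuracy - validation_accuracy


# Figures from this chapter: 25 iterations with snacks/val, and 10 iterations.
gap_25_iterations = generalization_gap(0.98, 0.92)
gap_10_iterations = generalization_gap(0.92, 0.89)
```

The gap is about 6 percentage points after 25 iterations versus about 3 after 10, which is the pattern described above: the longer run scores higher overall but has memorized more of the training set.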

There are several ways to deal with overfitting. The best strategy is to train with more data. Unfortunately, for this book we can’t really make you download a 100GB dataset, and so we’ve decided to make do with a relatively small dataset. For image classifiers, you can augment your image data by flipping, rotating, shearing or changing the exposure of images. Here’s an illustration of data augmentation:

So try it: add a new Model Source, name it Multisnacks crop, set the options as before, but also select some data augmentation:

The augmentation options appear in alphabetical order, but the pre-Xcode-11 options window lists them from greatest to least training effectiveness, with Crop as the most effective choice:

This is also the order in which the classifier applies options, if you select more than one. Selecting Crop creates four cropped images for each original image, so feature extraction takes almost five times longer (14m 48s for me). Selecting all six augmentation options creates 100 augmented images for each original! Actually, “only 100,” because the number of augmentation images is capped at 100.
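To get a feel for what augmentation does to the pixels, here is a small Python sketch that treats an image as a grid of grayscale values. It is not how Create ML implements its options; the function names and the tiny 2x3 “image” are made up for illustration. The last lines work out how the training set grows when each original gains four extra images.

```python
def flip_horizontal(image):
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in image]


def rotate_90_clockwise(image):
    """Rotate the image a quarter turn clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def adjust_exposure(image, factor):
    """Brighten (factor > 1) or darken (factor < 1), clamping to 0...255."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


image = [
    [1, 2, 3],
    [4, 5, 6],
]
flipped = flip_horizontal(image)      # [[3, 2, 1], [6, 5, 4]]
rotated = rotate_90_clockwise(image)  # [[4, 1], [5, 2], [6, 3]]

# Each of the 4,838 originals plus four augmented copies.
total_images = 4838 * (1 + 4)
```

Each transformed copy keeps the original’s label, so the model sees the same snack from a slightly different “viewpoint” without anyone having to collect new photos.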

Click Train, then go brew a pot of tea or bake some cookies.

Back again? Here’s what I got:

Well, training accuracy is a little lower (97%), but so is validation accuracy (91%), by the same amount. So it’s still overfitting, but it’s also taking longer to learn — that’s understandable, with almost 25,000 images!

Another trick is adding regularization to the model — something that penalizes large weights, because a model that gives a lot of weight to a few features is more likely to be overfitting. Create ML doesn’t let you do regularization, so you’ll learn more about it in Chapter 5, “Digging Deeper into Turi Create.”
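As a taste of what that looks like, here is a minimal Python sketch of one common form, L2 regularization, which adds the sum of the squared weights to the loss. The `strength` value and the example weights are assumptions for illustration; this is not necessarily the form that Turi Create uses.

```python
def l2_penalty(weights, strength):
    """Penalty that grows with the square of each weight."""
    return strength * sum(w * w for w in weights)


def regularized_loss(data_loss, weights, strength=0.01):
    """Loss on the data plus a penalty for large weights."""
    return data_loss + l2_penalty(weights, strength)


# Two hypothetical models that fit the training data equally well.
concentrated = regularized_loss(0.5, [3.0, 0.0, 0.0])  # leans on one feature
spread_out = regularized_loss(0.5, [1.0, 1.0, 1.0])    # spreads the weight
```

Because the penalty squares each weight, the model that puts all its weight on one feature ends up with the higher loss, so training is nudged toward the spread-out solution.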

The takeaway is that training accuracy is a useful metric, but it only says something about whether the model is still learning new things, not about how well the model works in practice. A good score on the training images isn’t really that interesting, since you already know what their classes are, after all.

What you care about is how well the model works on images that it has never seen before. Therefore, the metric to keep your eye on is the validation accuracy, as this is a good indicator of the performance of the model in the real world.

Note: By the way, overfitting isn’t the only reason why the validation accuracy can be lower than the training accuracy. If your training images are different from your validation images in some fundamental way (silly example: all your training images are photos taken at night while the validation images are taken during the day), then your model obviously won’t get a good validation score. It doesn’t really matter where your validation images come from, as long as these images were not used for training and they contain the same kinds of objects. This is why Create ML randomly picks 5% of the training images to use for validation. You’ll take a closer look at overfitting and the difference between the training and validation accuracies in the next chapters.

More metrics and the test set

Now that you’ve trained the model, it’s good to know how well it does on new images that it has never seen before. You already got a little taste of that from the validation accuracy during training, but the dataset also comes with a collection of test images that you haven’t used yet. These are stored in the snacks/test folder, and are organized by class name, just like the training data.

Switch to the Model Source with the highest Validation accuracy — in my case, it’s the first 25 iter one, with 94% validation accuracy.

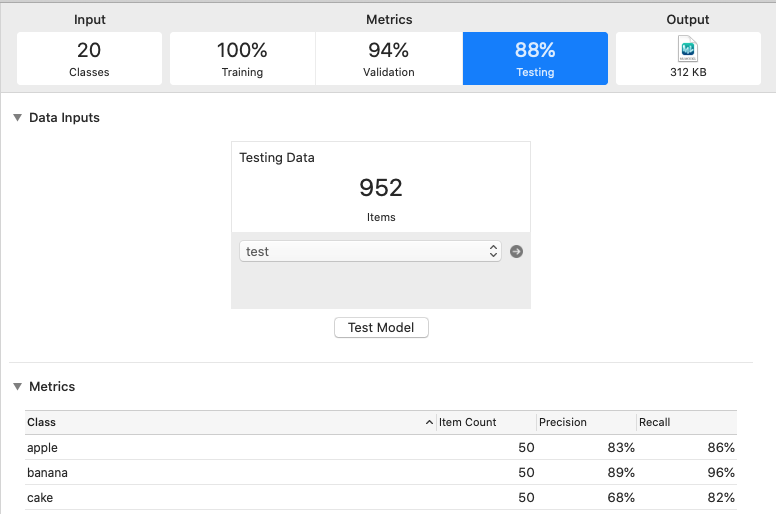

Select the Testing tab, then select snacks/test:

Click Test Model: This takes a few moments to compute. Just like during training, the feature extraction on the images takes more time than classification.

88% accuracy! Lower than validation accuracy :[.

Note: You may be wondering what the difference is between the validation set that’s used during training and the test set you’re using now. They are both used to find out how well the trained model does on new images.

However, the validation set is often also used to tune the settings of the learning algorithm — the so-called hyperparameters. Because of this, the model does get influenced by the images in the validation set, even though the training process never looks at these images directly. So while the validation score gives a better idea of the true performance of the model than the training accuracy, it is still not completely impartial.

That’s why it’s a good idea to reserve a separate test set. Ideally, you’d evaluate your model only once on this test set, when you’re completely done training it. You should resist the temptation to go back and tweak your model to improve the test set performance. You don’t want the test set images to influence how the model is trained, making the test set no longer a good representation of unseen images. It’s probably fine if you do this a few times, especially if your model isn’t very good yet, but it shouldn’t become a habit. Save the test set for last and evaluate on it as few times as possible.

Again, the question: Is 88% correct a good score or a bad score? It means more than one out of 10 images is scored wrong. Obviously, we’d rather see an accuracy score of 99% (only one out of 100 images wrong) or better, but whether that’s feasible depends on the capacity of your model, the number of classes and how many training images you have.

Even if 88% correct is not ideal, it does mean your model has actually learned quite a bit. After all, with 20 classes, a totally random guess would only be correct one out of 20 times or 5% on average. So the model is already doing much better than random guesses. But it looks like Create ML isn’t going to do any better than this with the current dataset.
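The arithmetic behind that comparison is easy to check. The sketch below uses the 20 classes, the 88% test accuracy and the 950-image test set mentioned in this chapter; the variable names are only illustrative, and because 88% is a rounded figure, the mistake count is approximate.

```swift
let classCount = 20
let testImageCount = 950
let testAccuracy = 0.88

// Accuracy of a classifier that picks one of the 20 classes uniformly at random.
let chanceAccuracy = 1.0 / Double(classCount)

// Approximate number of test images the trained model gets wrong.
let expectedMistakes = Int((Double(testImageCount) * (1 - testAccuracy)).rounded())

print(chanceAccuracy)                  // 0.05
print(expectedMistakes)                // 114
print(testAccuracy / chanceAccuracy)   // how many times better than chance
```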

Keep in mind that the accuracy score only looks at the top prediction, the class with the highest probability. If a picture contains an apple, and the most confident prediction is “hot dog,” then it’s obviously a wrong answer. But if the top prediction is “hot dog” with 40% confidence, while the second highest class is “apple” with 39% confidence, then you might still consider this a correct prediction.
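One way to quantify this more forgiving view is top-k accuracy, which counts a prediction as correct when the true class is among the k most probable classes. The sketch below is not a feature of Create ML; the Prediction type, the topKAccuracy function and the probabilities are hypothetical.

```swift
// One prediction: the true label plus the model's probability for each class.
struct Prediction {
    let trueLabel: String
    let probabilities: [String: Double]
}

// Fraction of predictions whose true label is among the k most probable classes.
func topKAccuracy(_ predictions: [Prediction], k: Int) -> Double {
    guard !predictions.isEmpty else { return 0 }
    var hits = 0
    for prediction in predictions {
        let topLabels = prediction.probabilities
            .sorted { $0.value > $1.value }
            .prefix(k)
            .map { $0.key }
        if topLabels.contains(prediction.trueLabel) {
            hits += 1
        }
    }
    return Double(hits) / Double(predictions.count)
}

let predictions = [
    Prediction(trueLabel: "apple",
               probabilities: ["hot dog": 0.40, "apple": 0.39, "orange": 0.21]),
    Prediction(trueLabel: "banana",
               probabilities: ["banana": 0.90, "hot dog": 0.06, "apple": 0.04])
]
print(topKAccuracy(predictions, k: 1))  // 0.5
print(topKAccuracy(predictions, k: 2))  // 1.0
```

The apple photo counts as a miss for k = 1 but as a hit for k = 2, which is exactly the 40% versus 39% situation described above.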

Examining your output model

It’s time to look at your actual Core ML model: select the Output tab, then drag the snacks/test folder onto where it says Drag or Add Files. Quick as a flash, your model classifies the test images. You can inspect each one to see the predicted class(es) and the model’s confidence level:

It’s surprising how your model gets it right for some of the peculiar images:

The whole point of training your own models is to use them in your apps, so how do you save this new model so you can load it with Core ML?

Just drag it from the Create ML app to your desktop or anywhere in Finder — so easy! Then add it to Xcode in the usual way. Simply replace the existing .mlmodel file with your new one. The model that’s currently in the Snacks app was created with SqueezeNet as the feature extractor — it’s 5MB. Your new model from Create ML is only 312KB! It’s actually a much bigger model, but most of it — the VisionFeaturePrint_Screen feature extractor — is already included in the operating system, and so you don’t need to distribute that as part of the .mlmodel file.

A Core ML model normally combines the feature extractor with the logistic regression classifier into a single model. You still need the feature extractor because, for any new images you want to classify, you also need to compute their feature vectors. With Core ML, the logistic regression is simply another layer in the neural network, i.e., another stage in the pipeline.

However, a VisionFeaturePrint_Screen-based Core ML model doesn’t need to include the feature extractor, because it’s part of iOS. So the Core ML model is basically just the logistic regression classifier, and quite small!
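To give a feel for what "just the logistic regression classifier" computes, here is a minimal sketch of that final stage: one weighted sum per class, followed by softmax to turn the scores into probabilities. The classify function and the toy numbers are assumptions for illustration; the real model's weights and internal layout are not shown in this chapter.

```swift
import Foundation

// Hypothetical classifier stage: score = weights · features + bias for each class,
// then softmax converts the scores into probabilities that sum to 1.
func classify(features: [Double], weights: [[Double]], biases: [Double]) -> [Double] {
    var scores: [Double] = []
    for (classWeights, bias) in zip(weights, biases) {
        var score = bias
        for (weight, feature) in zip(classWeights, features) {
            score += weight * feature
        }
        scores.append(score)
    }
    // Subtracting the largest score keeps exp() from overflowing.
    let maxScore = scores.max() ?? 0
    let exps = scores.map { exp($0 - maxScore) }
    let total = exps.reduce(0, +)
    return exps.map { $0 / total }
}

// Toy example with 3 features and 2 classes.
let probabilities = classify(
    features: [1.0, 0.0, 2.0],
    weights: [[0.5, 0.1, 0.2], [0.1, 0.3, 0.1]],
    biases: [0.0, 0.1]
)
print(probabilities)
```

In this sketch, a classifier for 2,048 features and 20 classes would hold 20 × 2,048 weights plus 20 biases, or 40,980 numbers, which is tiny compared with a full convolutional feature extractor.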

Note: There are a few other differences between these two feature extractors. SqueezeNet is a relatively small pre-trained model that expects 227×227 images, and extracts 1,000 features.

VisionFeaturePrint_Screen uses 299×299 images, and extracts 2,048 features. So the kind of knowledge that is extracted from the image by the Vision model is much richer, which is why the model you just trained with Create ML actually performs better than the SqueezeNet-based model from the previous chapter, which only has a 67% validation accuracy!

Classifying on live video

The example project in this chapter’s resources is a little different than the app you worked with in the previous chapter. It works on live video from the camera. The VideoCapture class uses AVCaptureSession to read video frames from the iPhone’s camera at 30 frames per second. The ViewController acts as the delegate for this VideoCapture class and is called with a CVPixelBuffer object 30 times per second. It uses Vision to make a prediction and then shows this on the screen in a label.

The code is mostly the same as in the previous app, except that there’s no longer a UIImagePickerController. Instead, the app runs the classifier continuously.

There is also an FPSCounter object that counts how many frames per second the app achieves. With a model that uses VisionFeaturePrint_Screen as the feature extractor you should be able to get 30 FPS on a modern device.
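Counting frames per second boils down to counting how many frames complete within a time window. The sketch below is a hypothetical stand-in, not the app's actual FPSCounter; it takes timestamps as plain numbers of seconds so that it runs anywhere.

```swift
// Hypothetical stand-in for the app's FPSCounter; the real class may work differently.
struct SimpleFPSCounter {
    private var windowStart: Double? = nil
    private var frameCount = 0
    private(set) var fps = 0.0

    // Call once per processed frame, passing the current time in seconds.
    mutating func frameCompleted(at timestamp: Double) {
        guard let start = windowStart else {
            windowStart = timestamp   // the first frame only starts the window
            return
        }
        frameCount += 1
        let elapsed = timestamp - start
        if elapsed >= 1.0 {
            // At least one second has passed: publish the rate and start a new window.
            fps = Double(frameCount) / elapsed
            windowStart = timestamp
            frameCount = 0
        }
    }
}

// Simulate two seconds of frames arriving every 1/30 of a second.
var counter = SimpleFPSCounter()
for frame in 0...60 {
    counter.frameCompleted(at: Double(frame) / 30.0)
}
print(counter.fps)
```

In an app, the timestamp would come from a system clock, and the counter would be updated each time a prediction finishes.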

Note: The app has a setting for videoCapture.frameInterval that lets you run the classifier less often, in order to save battery power. Experiment with this setting, and watch the energy usage screen in Xcode for the difference this makes.

The VideoCapture class is just a bare-bones example of how to read video from the camera. We kept it simple on purpose so as not to make the example app too complicated. For real-world production usage you’ll need to make this more robust, so it can handle interruptions, and use more camera features such as auto-focus, the front-facing camera, and so on.
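Running the classifier less often, as the frameInterval setting does, can be sketched as a simple frame skipper. This is an assumption about how such a setting might behave, not the VideoCapture class's actual code; FrameThrottle and shouldProcessNextFrame are hypothetical names.

```swift
// Hypothetical frame skipper: lets every Nth frame through to the classifier.
struct FrameThrottle {
    let frameInterval: Int
    private var seenFrames = 0

    init(frameInterval: Int) {
        // An interval below 1 makes no sense, so treat it as "process every frame".
        self.frameInterval = max(1, frameInterval)
    }

    // Returns true when the current frame should be classified.
    mutating func shouldProcessNextFrame() -> Bool {
        seenFrames += 1
        if seenFrames >= frameInterval {
            seenFrames = 0
            return true
        }
        return false
    }
}

// One second of video at 30 frames per second, classifying every third frame.
var throttle = FrameThrottle(frameInterval: 3)
var processed = 0
for _ in 1...30 {
    if throttle.shouldProcessNextFrame() {
        processed += 1
    }
}
print(processed)  // 10
```

With an interval of 3, the classifier does a third of the work per second, which is where the battery savings would come from.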

Recap

In this chapter, you got a taste of training your own Core ML model with Create ML. Partly due to the limited dataset, the default settings got only about 90% accuracy. Increasing max iterations increased training accuracy, but validation accuracy was stuck at ~90%, indicating that overfitting might be happening. Augmenting the data with flipped images reduced the gap between training and validation accuracies, but you’ll need more iterations to increase the accuracies.

More images is better. We use 4,800 images, but 48,000 would have been better, and 4.8 million would have been even better. However, there is a real cost associated with finding and annotating training images, and for most projects a few hundred images or at most a few thousand images per class may be all you can afford. Use what you’ve got. You can always retrain the model at a later date once you’ve collected more training data. Data is king in machine learning, and whoever has the most of it usually ends up with a better model.

Create ML is super easy to use, but lets you tweak only a few aspects of the training process. It’s also currently limited to image and text classification models.

Turi Create gives you more task-focused models to customize, and lets you get more hands-on with the training process. It’s almost as easy to use as Create ML, but you need to write Python. The next chapter gets you started with some useful tools, so you can train a model with Turi Create. Then, in Chapter 5, “Digging Deeper into Turi Create,” you’ll get a closer look at the training process, and learn more about the building blocks of neural networks.

Challenge

Create your own dataset of labelled images, and use Create ML to train a model.

A fun dataset is the Kaggle dogs vs. cats competition at www.kaggle.com/c/dogs-vs-cats, which lets you train a binary classifier that can tell dogs from cats. The best models score about 98% accuracy. How close can you get with Create ML?

Also check out some of Kaggle’s other image datasets:

https://www.kaggle.com/datasets?sortBy=relevance&group=featured&search=image

Of course, don’t forget to put your own model into the iOS app to impress your friends and co-workers!

Key points

- You can use macOS playgrounds to test out Create ML, and play with the different settings, to create simple machine learning models.

- Create ML allows you to create small models that leverage the built-in Vision feature extractor already installed on iOS 12+ devices.

- Ideally, you want the validation accuracy to be similar to the training accuracy.

- There are several ways to deal with overfitting: include more images, increase training iterations, or augment your data.

- Precision and recall are useful metrics when evaluating your model.