Augmented Reality in Android with Google’s Face API

You’ll build a Snapchat Lens-like app called FaceSpotter, which draws cartoony features over faces in a camera feed using augmented reality. By Joey deVilla.


Classifications

The Face class provides classifications through these methods:

- getIsLeftEyeOpenProbability and getIsRightEyeOpenProbability: The probability that the specified eye is open.

- getIsSmilingProbability: The probability that the face is smiling.

All three return a float ranging from 0.0 (highly unlikely) to 1.0 (bet everything on it), or Face.UNCOMPUTED_PROBABILITY if the detector couldn’t compute a value. You’ll use these results to decide whether each eye is open and whether the face is smiling, and then pass that information along to FaceGraphic.

Modify FaceTracker to make use of classifications. First, add two new instance variables to the FaceTracker class to keep track of the previous eye states. As with landmarks, when subjects move around quickly, the detector may fail to determine eye states, and that’s when having the previous state comes in handy:

```java
private boolean mPreviousIsLeftEyeOpen = true;
private boolean mPreviousIsRightEyeOpen = true;
```
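To see how the previous state smooths over frames where the detector comes up empty, here’s a minimal, framework-free sketch of the same fallback logic. StickyEyeState is a hypothetical class, and its UNCOMPUTED constant is an assumption standing in for Face.UNCOMPUTED_PROBABILITY (-1.0f in the Mobile Vision API) so that the sketch runs on a plain JVM without Google Play services.

```java
// Framework-free sketch of "remember the last known eye state".
// StickyEyeState is hypothetical; UNCOMPUTED is assumed to mirror
// Face.UNCOMPUTED_PROBABILITY so this runs without Google Play services.
public class StickyEyeState {
    static final float UNCOMPUTED = -1.0f;
    static final float EYE_CLOSED_THRESHOLD = 0.4f;

    // Start with the eye open, like mPreviousIsLeftEyeOpen = true.
    private boolean previousIsOpen = true;

    // Returns this frame's eye state, reusing the last known state
    // when the detector couldn't compute a probability.
    public boolean update(float openProbability) {
        if (openProbability == UNCOMPUTED) {
            return previousIsOpen;
        }
        previousIsOpen = openProbability > EYE_CLOSED_THRESHOLD;
        return previousIsOpen;
    }

    public static void main(String[] args) {
        StickyEyeState leftEye = new StickyEyeState();
        float[] frames = {0.9f, UNCOMPUTED, 0.1f, UNCOMPUTED, 0.7f};
        for (float p : frames) {
            System.out.println(p + " -> " + (leftEye.update(p) ? "open" : "closed"));
        }
    }
}
```

Each uncomputed frame simply repeats whatever state came before it, so a blink detected in one frame isn’t undone by a missing reading in the next.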

Then update onUpdate as follows:

```java
@Override
public void onUpdate(FaceDetector.Detections<Face> detectionResults, Face face) {
    mOverlay.add(mFaceGraphic);
    updatePreviousLandmarkPositions(face);

    // Get face dimensions.
    mFaceData.setPosition(face.getPosition());
    mFaceData.setWidth(face.getWidth());
    mFaceData.setHeight(face.getHeight());

    // Get the positions of facial landmarks.
    // FaceGraphic only draws over the eyes, nose, and mouth, so only those
    // landmarks are stored. (Cheek and ear landmarks must not be passed to
    // setMouthBottomPosition(), since that would clobber the mouth position.)
    mFaceData.setLeftEyePosition(getLandmarkPosition(face, Landmark.LEFT_EYE));
    mFaceData.setRightEyePosition(getLandmarkPosition(face, Landmark.RIGHT_EYE));
    mFaceData.setNoseBasePosition(getLandmarkPosition(face, Landmark.NOSE_BASE));
    mFaceData.setMouthLeftPosition(getLandmarkPosition(face, Landmark.LEFT_MOUTH));
    mFaceData.setMouthBottomPosition(getLandmarkPosition(face, Landmark.BOTTOM_MOUTH));
    mFaceData.setMouthRightPosition(getLandmarkPosition(face, Landmark.RIGHT_MOUTH));

    // 1
    final float EYE_CLOSED_THRESHOLD = 0.4f;

    float leftOpenScore = face.getIsLeftEyeOpenProbability();
    if (leftOpenScore == Face.UNCOMPUTED_PROBABILITY) {
        // The detector couldn't tell; reuse the last known state.
        mFaceData.setLeftEyeOpen(mPreviousIsLeftEyeOpen);
    } else {
        mFaceData.setLeftEyeOpen(leftOpenScore > EYE_CLOSED_THRESHOLD);
        mPreviousIsLeftEyeOpen = mFaceData.isLeftEyeOpen();
    }

    float rightOpenScore = face.getIsRightEyeOpenProbability();
    if (rightOpenScore == Face.UNCOMPUTED_PROBABILITY) {
        // The detector couldn't tell; reuse the last known state.
        mFaceData.setRightEyeOpen(mPreviousIsRightEyeOpen);
    } else {
        mFaceData.setRightEyeOpen(rightOpenScore > EYE_CLOSED_THRESHOLD);
        mPreviousIsRightEyeOpen = mFaceData.isRightEyeOpen();
    }

    // 2
    // See if there's a smile!
    final float SMILING_THRESHOLD = 0.8f;
    mFaceData.setSmiling(face.getIsSmilingProbability() > SMILING_THRESHOLD);

    mFaceGraphic.update(mFaceData);
}
```

Here are the changes:

1. FaceGraphic should be responsible only for drawing graphics over faces, not for deciding whether an eye is open or closed based on the face detector’s probability assessments. That means FaceTracker should do those calculations and hand FaceGraphic ready-to-eat data in the form of a FaceData instance. These calculations take the results from getIsLeftEyeOpenProbability and getIsRightEyeOpenProbability and turn them into a simple true/false value. If the detector thinks there’s a greater than 40% chance that an eye is open, it’s considered open. If the detector returns Face.UNCOMPUTED_PROBABILITY, the eye keeps its previous state.

2. You’ll do the same for smiling with getIsSmilingProbability, but more strictly: if the detector thinks there’s a greater than 80% chance that the face is smiling, it’s considered to be smiling.
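The difference between the two thresholds is easy to see in isolation. This standalone sketch reproduces the comparisons from onUpdate in a hypothetical ClassificationThresholds helper (not part of the Face API); UNCOMPUTED is again an assumed stand-in for Face.UNCOMPUTED_PROBABILITY. It also shows a consequence of the code above: because the smile check has no previous-state fallback, an uncomputed smile probability (-1.0f) fails the `> 0.8f` test and is treated as "not smiling".

```java
// Standalone sketch of the classification thresholds used in onUpdate.
// ClassificationThresholds is a hypothetical helper, not part of the Face API.
public class ClassificationThresholds {
    static final float EYE_CLOSED_THRESHOLD = 0.4f;
    static final float SMILING_THRESHOLD = 0.8f;
    // Assumed stand-in for Face.UNCOMPUTED_PROBABILITY.
    static final float UNCOMPUTED = -1.0f;

    static boolean isEyeOpen(float openProbability) {
        return openProbability > EYE_CLOSED_THRESHOLD;
    }

    static boolean isSmiling(float smilingProbability) {
        return smilingProbability > SMILING_THRESHOLD;
    }

    public static void main(String[] args) {
        float[] probabilities = {0.3f, 0.6f, 0.9f, UNCOMPUTED};
        for (float p : probabilities) {
            System.out.printf("p=%.1f  eyeOpen=%b  smiling=%b%n",
                    p, isEyeOpen(p), isSmiling(p));
        }
    }
}
```

A probability of 0.6 counts as an open eye but not as a smile, which is the "more strictly" part in practice.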

Giving Faces the Cartoon Treatment

Now that you’re collecting landmarks and classifications, you can overlay any tracked face with these cartoon features:

- Cartoon eyes over the real eyes, with each cartoon eye reflecting the real eye’s open/closed state

- A pig nose over the real nose

- A mustache

- If the tracked face is smiling, the cartoon irises in its cartoon eyes are rendered as smiling stars.
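The eye rules in this list boil down to a three-way decision, which drawEye makes with nested if statements. Here’s an Android-free sketch of that decision expressed as an enum so the cases are easy to check; EyeStyleDemo, EyeStyle, and styleFor are hypothetical names used only for illustration.

```java
// Android-free sketch of the decision drawEye makes for each cartoon eye.
// EyeStyleDemo, EyeStyle, and styleFor are hypothetical names.
public class EyeStyleDemo {
    enum EyeStyle {
        IRIS,       // eye open, face not smiling: white eye with a plain iris
        STAR,       // eye open, face smiling: the iris becomes a smiling star
        CLOSED_LID  // eye closed: filled eyelid with a horizontal line
    }

    static EyeStyle styleFor(boolean eyeOpen, boolean smiling) {
        if (!eyeOpen) {
            // A closed eye looks the same whether or not the face is smiling.
            return EyeStyle.CLOSED_LID;
        }
        return smiling ? EyeStyle.STAR : EyeStyle.IRIS;
    }

    public static void main(String[] args) {
        for (boolean open : new boolean[] {true, false}) {
            for (boolean smiling : new boolean[] {true, false}) {
                System.out.println("open=" + open + ", smiling=" + smiling
                        + " -> " + styleFor(open, smiling));
            }
        }
    }
}
```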

Drawing these features requires the following changes to FaceGraphic’s draw method:

```java
@Override
public void draw(Canvas canvas) {
    final float DOT_RADIUS = 3.0f;
    final float TEXT_OFFSET_Y = -30.0f;

    // Confirm that the face and its features are still visible
    // before drawing any graphics over it.
    if (mFaceData == null) {
        return;
    }

    PointF detectPosition = mFaceData.getPosition();
    PointF detectLeftEyePosition = mFaceData.getLeftEyePosition();
    PointF detectRightEyePosition = mFaceData.getRightEyePosition();
    PointF detectNoseBasePosition = mFaceData.getNoseBasePosition();
    PointF detectMouthLeftPosition = mFaceData.getMouthLeftPosition();
    PointF detectMouthBottomPosition = mFaceData.getMouthBottomPosition();
    PointF detectMouthRightPosition = mFaceData.getMouthRightPosition();
    if ((detectPosition == null) ||
        (detectLeftEyePosition == null) ||
        (detectRightEyePosition == null) ||
        (detectNoseBasePosition == null) ||
        (detectMouthLeftPosition == null) ||
        (detectMouthBottomPosition == null) ||
        (detectMouthRightPosition == null)) {
        return;
    }

    // Face position and dimensions
    PointF position = new PointF(translateX(detectPosition.x),
                                 translateY(detectPosition.y));
    float width = scaleX(mFaceData.getWidth());
    float height = scaleY(mFaceData.getHeight());

    // Eye coordinates
    PointF leftEyePosition = new PointF(translateX(detectLeftEyePosition.x),
                                        translateY(detectLeftEyePosition.y));
    PointF rightEyePosition = new PointF(translateX(detectRightEyePosition.x),
                                         translateY(detectRightEyePosition.y));

    // Eye state
    boolean leftEyeOpen = mFaceData.isLeftEyeOpen();
    boolean rightEyeOpen = mFaceData.isRightEyeOpen();

    // Nose coordinates
    PointF noseBasePosition = new PointF(translateX(detectNoseBasePosition.x),
                                         translateY(detectNoseBasePosition.y));

    // Mouth coordinates
    PointF mouthLeftPosition = new PointF(translateX(detectMouthLeftPosition.x),
                                          translateY(detectMouthLeftPosition.y));
    PointF mouthRightPosition = new PointF(translateX(detectMouthRightPosition.x),
                                           translateY(detectMouthRightPosition.y));
    PointF mouthBottomPosition = new PointF(translateX(detectMouthBottomPosition.x),
                                            translateY(detectMouthBottomPosition.y));

    // Smile state
    boolean smiling = mFaceData.isSmiling();

    // Calculate the distance between the eyes using Pythagoras' formula,
    // and use that distance to set the size of the eyes and irises.
    final float EYE_RADIUS_PROPORTION = 0.45f;
    final float IRIS_RADIUS_PROPORTION = EYE_RADIUS_PROPORTION / 2.0f;
    float distance = (float) Math.sqrt(
        (rightEyePosition.x - leftEyePosition.x) * (rightEyePosition.x - leftEyePosition.x) +
        (rightEyePosition.y - leftEyePosition.y) * (rightEyePosition.y - leftEyePosition.y));
    float eyeRadius = EYE_RADIUS_PROPORTION * distance;
    float irisRadius = IRIS_RADIUS_PROPORTION * distance;

    // Draw the eyes.
    drawEye(canvas, leftEyePosition, eyeRadius, leftEyePosition, irisRadius, leftEyeOpen, smiling);
    drawEye(canvas, rightEyePosition, eyeRadius, rightEyePosition, irisRadius, rightEyeOpen, smiling);

    // Draw the nose.
    drawNose(canvas, noseBasePosition, leftEyePosition, rightEyePosition, width);

    // Draw the mustache.
    drawMustache(canvas, noseBasePosition, mouthLeftPosition, mouthRightPosition);
}
```

…and add the following methods to draw the eyes, nose, and mustache:

```java
private void drawEye(Canvas canvas,
                     PointF eyePosition, float eyeRadius,
                     PointF irisPosition, float irisRadius,
                     boolean eyeOpen, boolean smiling) {
    if (eyeOpen) {
        canvas.drawCircle(eyePosition.x, eyePosition.y, eyeRadius, mEyeWhitePaint);
        if (smiling) {
            // Smiling: draw a star in place of the iris.
            mHappyStarGraphic.setBounds(
                (int)(irisPosition.x - irisRadius),
                (int)(irisPosition.y - irisRadius),
                (int)(irisPosition.x + irisRadius),
                (int)(irisPosition.y + irisRadius));
            mHappyStarGraphic.draw(canvas);
        } else {
            canvas.drawCircle(irisPosition.x, irisPosition.y, irisRadius, mIrisPaint);
        }
    } else {
        // Closed: draw a filled eyelid with a horizontal line across it.
        canvas.drawCircle(eyePosition.x, eyePosition.y, eyeRadius, mEyelidPaint);
        float y = eyePosition.y;
        float start = eyePosition.x - eyeRadius;
        float end = eyePosition.x + eyeRadius;
        canvas.drawLine(start, y, end, y, mEyeOutlinePaint);
    }
    canvas.drawCircle(eyePosition.x, eyePosition.y, eyeRadius, mEyeOutlinePaint);
}

private void drawNose(Canvas canvas,
                      PointF noseBasePosition,
                      PointF leftEyePosition, PointF rightEyePosition,
                      float faceWidth) {
    final float NOSE_FACE_WIDTH_RATIO = (float)(1 / 5.0);
    float noseWidth = faceWidth * NOSE_FACE_WIDTH_RATIO;
    int left = (int)(noseBasePosition.x - (noseWidth / 2));
    int right = (int)(noseBasePosition.x + (noseWidth / 2));
    int top = (int)(leftEyePosition.y + rightEyePosition.y) / 2;
    int bottom = (int)noseBasePosition.y;

    mPigNoseGraphic.setBounds(left, top, right, bottom);
    mPigNoseGraphic.draw(canvas);
}

private void drawMustache(Canvas canvas,
                          PointF noseBasePosition,
                          PointF mouthLeftPosition, PointF mouthRightPosition) {
    int left = (int)mouthLeftPosition.x;
    int top = (int)noseBasePosition.y;
    int right = (int)mouthRightPosition.x;
    int bottom = (int)Math.min(mouthLeftPosition.y, mouthRightPosition.y);

    if (mIsFrontFacing) {
        mMustacheGraphic.setBounds(left, top, right, bottom);
    } else {
        mMustacheGraphic.setBounds(right, top, left, bottom);
    }
    mMustacheGraphic.draw(canvas);
}
```
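The sizing in draw and drawNose is plain arithmetic, so it can be checked away from the camera. This sketch reproduces those formulas using hypothetical names (FaceGeometry and its methods) and plain floats instead of android.graphics.PointF: the eye radius is 0.45 × the distance between the eyes, the iris radius is half that, and the pig nose is one fifth of the face width wide, spanning vertically from the average of the eyes’ y-coordinates down to the nose base. Math.hypot computes the same Pythagorean distance as the explicit Math.sqrt expression in draw.

```java
// Standalone sketch of the sizing arithmetic used by draw() and drawNose().
// FaceGeometry and its methods are hypothetical; coordinates are plain floats
// instead of android.graphics.PointF so the sketch runs on any JVM.
public class FaceGeometry {
    static final float EYE_RADIUS_PROPORTION = 0.45f;
    static final float IRIS_RADIUS_PROPORTION = EYE_RADIUS_PROPORTION / 2.0f;
    static final float NOSE_FACE_WIDTH_RATIO = (float) (1 / 5.0);

    // Distance between the eyes: hypot(dx, dy) == sqrt(dx * dx + dy * dy).
    static float eyeDistance(float leftX, float leftY, float rightX, float rightY) {
        return (float) Math.hypot(rightX - leftX, rightY - leftY);
    }

    static float eyeRadius(float eyeDistance) {
        return EYE_RADIUS_PROPORTION * eyeDistance;
    }

    static float irisRadius(float eyeDistance) {
        return IRIS_RADIUS_PROPORTION * eyeDistance;
    }

    // Returns {left, top, right, bottom}, the argument order that
    // drawNose() passes to Drawable.setBounds().
    static int[] noseBounds(float noseX, float noseY,
                            float leftEyeY, float rightEyeY, float faceWidth) {
        float noseWidth = faceWidth * NOSE_FACE_WIDTH_RATIO;
        int left = (int) (noseX - noseWidth / 2);
        int right = (int) (noseX + noseWidth / 2);
        int top = (int) (leftEyeY + rightEyeY) / 2;  // same expression as drawNose()
        int bottom = (int) noseY;
        return new int[] {left, top, right, bottom};
    }

    public static void main(String[] args) {
        float d = eyeDistance(100f, 200f, 160f, 280f);
        System.out.println("eye distance = " + d);
        System.out.println("eye radius = " + eyeRadius(d)
                + ", iris radius = " + irisRadius(d));
        System.out.println(java.util.Arrays.toString(
                noseBounds(130f, 300f, 200f, 280f, 250f)));
    }
}
```

Tying the cartoon sizes to the distance between the eyes rather than to fixed pixel values keeps the features proportional as a face moves closer to or farther from the camera.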

Run the app and start pointing the camera at faces. For non-smiling faces with both eyes open, you should see something like this:

This one’s of me winking with my right eye (hence it’s closed) and smiling (which is why my iris is a smiling star):

The app will draw cartoon features over a small number of faces simultaneously…

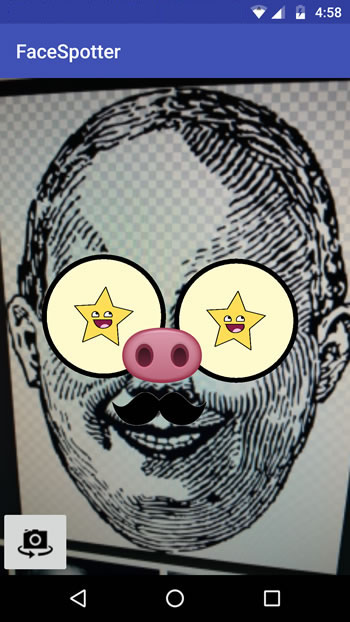

…and even over faces in illustrations if they’re realistic enough:

It’s a lot more like Snapchat now!