Augmented Reality in Android with Google’s Face API

You’ll build a Snapchat Lens-like app called FaceSpotter which draws cartoony features over faces in a camera feed using augmented reality. By Joey deVilla.

Finding Faces

First you add a view into the overlay to draw detected face data.

Open FaceGraphic.java. You may have noticed the declaration for the instance variable mFace is marked with the keyword volatile. mFace stores face data sent from FaceTracker, and may be written to by many threads. Marking it as volatile guarantees that you always get the result of the latest “write” any time you read its value. This is important since face data will change very quickly.
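To make the volatile pattern concrete, here is a minimal plain-Java sketch with no Android dependencies. LatestFaceHolder and FaceSnapshot are hypothetical stand-ins for FaceGraphic and Face, not classes from the project. It shows a volatile field written by one thread and read by another, with the reader copying the field into a local variable once before using it.

```java
class LatestFaceHolder {
    // Hypothetical stand-in for the Face data that the tracker sends.
    static final class FaceSnapshot {
        final int id;
        final float width;

        FaceSnapshot(int id, float width) {
            this.id = id;
            this.width = width;
        }
    }

    // volatile: a read on any thread sees the most recently completed write.
    private volatile FaceSnapshot latest;

    void update(FaceSnapshot snapshot) {
        latest = snapshot;
    }

    String describe() {
        // Read the field once; a concurrent update() cannot swap the object
        // between the null check and the use of the local copy.
        FaceSnapshot snapshot = latest;
        if (snapshot == null) {
            return "no face";
        }
        return "id: " + snapshot.id + ", width: " + snapshot.width;
    }

    public static void main(String[] args) throws InterruptedException {
        LatestFaceHolder holder = new LatestFaceHolder();
        // Simulates a detector thread that keeps overwriting the field.
        Thread detector = new Thread(() -> {
            for (int i = 1; i <= 100; i++) {
                holder.update(new FaceSnapshot(7, i));
            }
        });
        detector.start();
        detector.join();
        System.out.println(holder.describe());
    }
}
```

Copying the field into a local first is the same idea as the line Face face = mFace; in the draw() method that follows.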

Delete the existing draw() and add the following to FaceGraphic:

    // 1
    void update(Face face) {
        mFace = face;
        postInvalidate(); // Trigger a redraw of the graphic (i.e. cause draw() to be called).
    }

    @Override
    public void draw(Canvas canvas) {
        // 2
        // Confirm that the face and its features are still visible
        // before drawing any graphics over it.
        Face face = mFace;
        if (face == null) {
            return;
        }

        // 3
        float centerX = translateX(face.getPosition().x + face.getWidth() / 2.0f);
        float centerY = translateY(face.getPosition().y + face.getHeight() / 2.0f);
        float offsetX = scaleX(face.getWidth() / 2.0f);
        float offsetY = scaleY(face.getHeight() / 2.0f);

        // 4
        // Draw a box around the face.
        float left = centerX - offsetX;
        float right = centerX + offsetX;
        float top = centerY - offsetY;
        float bottom = centerY + offsetY;

        // 5
        canvas.drawRect(left, top, right, bottom, mHintOutlinePaint);

        // 6
        // Draw the face's id.
        canvas.drawText(String.format("id: %d", face.getId()), centerX, centerY, mHintTextPaint);
    }

Here’s what that code does:

1. When a FaceTracker instance gets an update on a tracked face, it calls its corresponding FaceGraphic instance’s update method and passes it information about that face. The method saves that information in mFace and then calls postInvalidate, which FaceGraphic inherits from its parent class and which forces the graphic to redraw.

2. Before attempting to draw a box around the face, the draw method checks to see if the face is still being tracked. If it is, mFace will be non-null.

3. The x- and y-coordinates of the center of the face are calculated. FaceTracker provides camera coordinates, but you’re drawing to FaceGraphic’s view coordinates, so you use GraphicOverlay’s translateX and translateY methods to convert mFace’s camera coordinates to the view coordinates of the canvas.

4. Calculate the x-offsets for the left and right sides of the box and the y-offsets for the top and bottom. The difference between the camera’s and the view’s coordinate systems requires you to convert the face’s width and height using GraphicOverlay’s scaleX and scaleY methods.

5. Draw the box around the face using the calculated center coordinates and offsets.

6. Draw the face’s id using the face’s center point as the starting coordinates.
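The coordinate conversion described above can be sketched in plain Java. This is an illustration only, and it assumes two things: that the overlay scales camera coordinates by the ratio of view size to preview size, and that it mirrors the x-axis for a front-facing camera. OverlayMapper and faceBox are hypothetical names; the project’s actual GraphicOverlay code isn’t shown in this section and may differ.

```java
class OverlayMapper {
    private final float widthScale;   // view width / camera preview width
    private final float heightScale;  // view height / camera preview height
    private final float viewWidth;
    private final boolean frontFacing;

    OverlayMapper(float previewWidth, float previewHeight,
                  float viewWidth, float viewHeight, boolean frontFacing) {
        this.widthScale = viewWidth / previewWidth;
        this.heightScale = viewHeight / previewHeight;
        this.viewWidth = viewWidth;
        this.frontFacing = frontFacing;
    }

    // Convert a horizontal or vertical length from camera to view units.
    float scaleX(float horizontal) { return horizontal * widthScale; }
    float scaleY(float vertical) { return vertical * heightScale; }

    // Convert a position; assumed here to mirror x for the front camera.
    float translateX(float x) {
        return frontFacing ? viewWidth - scaleX(x) : scaleX(x);
    }
    float translateY(float y) { return scaleY(y); }

    // Returns {left, top, right, bottom} in view coordinates for a face whose
    // top-left corner and size are given in camera coordinates.
    float[] faceBox(float faceX, float faceY, float faceWidth, float faceHeight) {
        float centerX = translateX(faceX + faceWidth / 2.0f);
        float centerY = translateY(faceY + faceHeight / 2.0f);
        float offsetX = scaleX(faceWidth / 2.0f);
        float offsetY = scaleY(faceHeight / 2.0f);
        return new float[] {
            centerX - offsetX, centerY - offsetY,
            centerX + offsetX, centerY + offsetY
        };
    }

    public static void main(String[] args) {
        OverlayMapper rear = new OverlayMapper(320f, 240f, 640f, 480f, false);
        System.out.println(java.util.Arrays.toString(rear.faceBox(100f, 50f, 40f, 60f)));
    }
}
```

Computing the center first and then applying scaled offsets keeps the box centered on the face even when the x-axis is mirrored, which is why draw() does not simply translate the face’s top-left corner.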

The face detector in FaceActivity sends information about faces it detects in the camera’s data stream to its assigned multiprocessor. For each detected face, the multiprocessor spawns a new FaceTracker instance.

Add the following methods to FaceTracker.java after the constructor:

    // 1
    @Override
    public void onNewItem(int id, Face face) {
        mFaceGraphic = new FaceGraphic(mOverlay, mContext, mIsFrontFacing);
    }

    // 2
    @Override
    public void onUpdate(FaceDetector.Detections<Face> detectionResults, Face face) {
        mOverlay.add(mFaceGraphic);
        mFaceGraphic.update(face);
    }

    // 3
    @Override
    public void onMissing(FaceDetector.Detections<Face> detectionResults) {
        mOverlay.remove(mFaceGraphic);
    }

    @Override
    public void onDone() {
        mOverlay.remove(mFaceGraphic);
    }

Here’s what each method does:

- onNewItem: Called when a new Face is detected and its tracking begins. You’re using it to create a new instance of FaceGraphic, which makes sense: when a new face is detected, you want to create new AR images to draw over it.

- onUpdate: Called when some property (position, angle, or state) of a tracked face changes. You’re using it to add the FaceGraphic instance to the GraphicOverlay and then call FaceGraphic’s update method, which passes along the tracked face’s data.

- onMissing and onDone: Called when a tracked face is assumed to be temporarily and permanently gone, respectively. Both remove the FaceGraphic instance from the overlay.
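The callback sequence can be simulated without the camera. The sketch below is a plain-Java model of the lifecycle, not the project’s code: TrackerLifecycleSketch is a hypothetical name, and it assumes the overlay keeps its graphics in a set, so that calling add on every update leaves only one copy of the graphic on screen.

```java
import java.util.LinkedHashSet;
import java.util.Set;

class TrackerLifecycleSketch {
    // Stand-in for GraphicOverlay; assumed to behave like a set of graphics.
    final Set<Object> overlay = new LinkedHashSet<>();

    // Stand-in for the FaceGraphic that belongs to this tracked face.
    private Object graphic;

    // A new face appeared: create its graphic, but don't show it yet.
    void onNewItem() { graphic = new Object(); }

    // The face changed: make sure the graphic is shown (adding twice is a no-op).
    void onUpdate() { overlay.add(graphic); }

    // The face is temporarily gone: hide the graphic but keep the instance.
    void onMissing() { overlay.remove(graphic); }

    // The face is permanently gone: hide the graphic for good.
    void onDone() { overlay.remove(graphic); }

    public static void main(String[] args) {
        TrackerLifecycleSketch tracker = new TrackerLifecycleSketch();
        tracker.onNewItem();
        tracker.onUpdate();
        tracker.onUpdate();
        System.out.println("graphics after two updates: " + tracker.overlay.size());
        tracker.onMissing();
        System.out.println("graphics while missing: " + tracker.overlay.size());
        tracker.onUpdate();
        tracker.onDone();
        System.out.println("graphics after done: " + tracker.overlay.size());
    }
}
```

Adding the graphic in onUpdate rather than in onNewItem means a face that goes missing and then reappears gets its graphic back on the next update.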

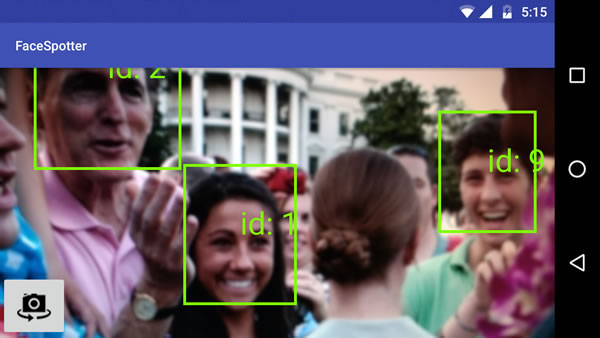

Run the app. It will draw a box around each face it detects, along with the corresponding ID number:

Landmarks Ahoy!

The Face API can identify the facial landmarks shown below.

You’ll modify the app so that it identifies the following for any tracked face:

- left eye

- right eye

- nose base

- mouth left

- mouth bottom

- mouth right

This information will be saved in a FaceData object, instead of the provided Face object.

For facial landmarks, “left” and “right” refer to the subject’s left and right. Viewed through the front camera, the subject’s right eye will be closer to the right side of the screen, but through the rear camera, it’ll be closer to the left.

Open FaceTracker.java and modify onUpdate() as shown below. The call to update() will cause a build error for the moment, because FaceGraphic has not yet been changed to use the FaceData model. You’ll fix that soon.

    @Override
    public void onUpdate(FaceDetector.Detections<Face> detectionResults, Face face) {
        mOverlay.add(mFaceGraphic);

        // Get face dimensions.
        mFaceData.setPosition(face.getPosition());
        mFaceData.setWidth(face.getWidth());
        mFaceData.setHeight(face.getHeight());

        // Get the positions of facial landmarks.
        updatePreviousLandmarkPositions(face);
        mFaceData.setLeftEyePosition(getLandmarkPosition(face, Landmark.LEFT_EYE));
        mFaceData.setRightEyePosition(getLandmarkPosition(face, Landmark.RIGHT_EYE));
        mFaceData.setNoseBasePosition(getLandmarkPosition(face, Landmark.NOSE_BASE));
        mFaceData.setMouthLeftPosition(getLandmarkPosition(face, Landmark.LEFT_MOUTH));
        mFaceData.setMouthBottomPosition(getLandmarkPosition(face, Landmark.BOTTOM_MOUTH));
        mFaceData.setMouthRightPosition(getLandmarkPosition(face, Landmark.RIGHT_MOUTH));

        mFaceGraphic.update(mFaceData);
    }

Note that you’re now passing a FaceData instance to FaceGraphic’s update method instead of the Face instance that the onUpdate method receives.

This lets you control the face information passed to FaceGraphic, which in turn lets you use some math trickery: when a face is moving too quickly for its landmarks to be detected, you approximate their current locations from their last known ones. You use mPreviousLandmarkPositions and the getLandmarkPosition and updatePreviousLandmarkPositions methods for this purpose.
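One way such an approximation could work is sketched below in plain Java. The bodies of getLandmarkPosition and updatePreviousLandmarkPositions are not shown in this section, so this is an assumption about the general idea rather than the project’s implementation: remember each landmark’s position as a proportion of the face box, then rebuild it from the current face box when the landmark is missing. LandmarkEstimator, remember and positionOf are hypothetical names, and landmark types are plain integer keys.

```java
import java.util.HashMap;
import java.util.Map;

class LandmarkEstimator {
    // Landmark type -> {xProportion, yProportion} relative to the face box.
    private final Map<Integer, float[]> previousProportions = new HashMap<>();

    // Record where each detected landmark sits inside the current face box.
    void remember(float faceX, float faceY, float faceWidth, float faceHeight,
                  Map<Integer, float[]> detected) {
        for (Map.Entry<Integer, float[]> entry : detected.entrySet()) {
            float[] position = entry.getValue();
            previousProportions.put(entry.getKey(), new float[] {
                (position[0] - faceX) / faceWidth,
                (position[1] - faceY) / faceHeight
            });
        }
    }

    // Use the detected position if there is one; otherwise estimate it from
    // the last known proportions. Returns null if the landmark was never seen.
    float[] positionOf(int type, float faceX, float faceY, float faceWidth,
                       float faceHeight, Map<Integer, float[]> detected) {
        float[] current = detected.get(type);
        if (current != null) {
            return current;
        }
        float[] proportion = previousProportions.get(type);
        if (proportion == null) {
            return null;
        }
        return new float[] {
            faceX + proportion[0] * faceWidth,
            faceY + proportion[1] * faceHeight
        };
    }
}
```

Because positionOf can still return null for a landmark that has never been detected, code that consumes these positions needs null checks before drawing.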

Now open FaceGraphic.java.

First, since it’s now receiving a FaceData value instead of a Face value from FaceTracker, you need to change a key instance variable declaration from:

private volatile Face mFace;

to:

private volatile FaceData mFaceData;

Modify update() to account for this change:

    void update(FaceData faceData) {
        mFaceData = faceData;
        postInvalidate(); // Trigger a redraw of the graphic (i.e. cause draw() to be called).
    }

And finally, you need to update draw() to draw dots over the landmarks of any tracked face, and identifying text over those dots:

    @Override
    public void draw(Canvas canvas) {
        final float DOT_RADIUS = 3.0f;
        final float TEXT_OFFSET_Y = -30.0f;

        // Confirm that the face and its features are still visible before drawing any graphics over it.
        if (mFaceData == null) {
            return;
        }

        // 1
        PointF detectPosition = mFaceData.getPosition();
        PointF detectLeftEyePosition = mFaceData.getLeftEyePosition();
        PointF detectRightEyePosition = mFaceData.getRightEyePosition();
        PointF detectNoseBasePosition = mFaceData.getNoseBasePosition();
        PointF detectMouthLeftPosition = mFaceData.getMouthLeftPosition();
        PointF detectMouthBottomPosition = mFaceData.getMouthBottomPosition();
        PointF detectMouthRightPosition = mFaceData.getMouthRightPosition();
        if ((detectPosition == null) ||
                (detectLeftEyePosition == null) ||
                (detectRightEyePosition == null) ||
                (detectNoseBasePosition == null) ||
                (detectMouthLeftPosition == null) ||
                (detectMouthBottomPosition == null) ||
                (detectMouthRightPosition == null)) {
            return;
        }

        // 2
        float leftEyeX = translateX(detectLeftEyePosition.x);
        float leftEyeY = translateY(detectLeftEyePosition.y);
        canvas.drawCircle(leftEyeX, leftEyeY, DOT_RADIUS, mHintOutlinePaint);
        canvas.drawText("left eye", leftEyeX, leftEyeY + TEXT_OFFSET_Y, mHintTextPaint);

        float rightEyeX = translateX(detectRightEyePosition.x);
        float rightEyeY = translateY(detectRightEyePosition.y);
        canvas.drawCircle(rightEyeX, rightEyeY, DOT_RADIUS, mHintOutlinePaint);
        canvas.drawText("right eye", rightEyeX, rightEyeY + TEXT_OFFSET_Y, mHintTextPaint);

        float noseBaseX = translateX(detectNoseBasePosition.x);
        float noseBaseY = translateY(detectNoseBasePosition.y);
        canvas.drawCircle(noseBaseX, noseBaseY, DOT_RADIUS, mHintOutlinePaint);
        canvas.drawText("nose base", noseBaseX, noseBaseY + TEXT_OFFSET_Y, mHintTextPaint);

        float mouthLeftX = translateX(detectMouthLeftPosition.x);
        float mouthLeftY = translateY(detectMouthLeftPosition.y);
        canvas.drawCircle(mouthLeftX, mouthLeftY, DOT_RADIUS, mHintOutlinePaint);
        canvas.drawText("mouth left", mouthLeftX, mouthLeftY + TEXT_OFFSET_Y, mHintTextPaint);

        float mouthRightX = translateX(detectMouthRightPosition.x);
        float mouthRightY = translateY(detectMouthRightPosition.y);
        canvas.drawCircle(mouthRightX, mouthRightY, DOT_RADIUS, mHintOutlinePaint);
        canvas.drawText("mouth right", mouthRightX, mouthRightY + TEXT_OFFSET_Y, mHintTextPaint);

        float mouthBottomX = translateX(detectMouthBottomPosition.x);
        float mouthBottomY = translateY(detectMouthBottomPosition.y);
        canvas.drawCircle(mouthBottomX, mouthBottomY, DOT_RADIUS, mHintOutlinePaint);
        canvas.drawText("mouth bottom", mouthBottomX, mouthBottomY + TEXT_OFFSET_Y, mHintTextPaint);
    }

Here’s what you should note about this revised method:

1. Because face data will change very quickly, these checks are necessary to confirm that any objects that you extract from mFaceData are not null before using their data. Without these checks, the app will crash.

2. This part, while verbose, is fairly straightforward: it extracts the coordinates for each landmark and uses them to draw dots and identifying text over the appropriate locations on the tracked face.
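The guard-then-draw pattern can also be written as a loop over labeled positions. The following plain-Java sketch is an alternative illustration, not the tutorial’s code. LandmarkLabelSketch and drawCommands are hypothetical names, and the sketch records what would be drawn as strings instead of calling Canvas, so it runs without Android.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class LandmarkLabelSketch {
    // Returns the drawing steps for every landmark, or an empty list if any
    // landmark position is missing (mirroring the early return in draw()).
    static List<String> drawCommands(Map<String, float[]> landmarks, float textOffsetY) {
        List<String> commands = new ArrayList<>();
        if (landmarks.containsValue(null)) {
            return commands;
        }
        for (Map.Entry<String, float[]> entry : landmarks.entrySet()) {
            float x = entry.getValue()[0];
            float y = entry.getValue()[1];
            // A dot on the landmark, then its label shifted by the text offset.
            commands.add("circle at " + x + "," + y);
            commands.add("text '" + entry.getKey() + "' at " + x + "," + (y + textOffsetY));
        }
        return commands;
    }

    public static void main(String[] args) {
        Map<String, float[]> landmarks = new LinkedHashMap<>();
        landmarks.put("left eye", new float[] {100f, 80f});
        landmarks.put("nose base", new float[] {120f, 110f});
        for (String command : drawCommands(landmarks, -30f)) {
            System.out.println(command);
        }
    }
}
```

A loop like this trades the explicit, easy-to-follow repetition of the tutorial’s version for less code. Either form needs the same null check before any position is used.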

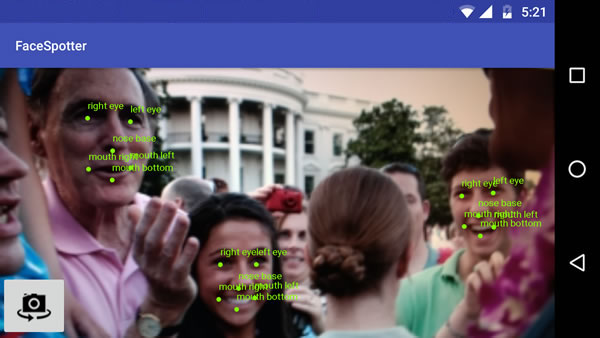

Run the app. You should get results similar to this…

…or with multiple faces, results like this:

Now that you can identify landmarks on faces, you can start drawing cartoon features over them! But first, let’s talk about facial classifications.