Tesseract OCR Tutorial for iOS

In this tutorial, you’ll learn how to read and manipulate text extracted from images using OCR by Tesseract. By Lyndsey Scott.

Contents

- Getting Started

- Tesseract’s Limitations

- Adding the Tesseract Framework

- How Tesseract OCR Works

- Adding Trained Data

- Loading the Image

- Implementing Tesseract OCR

- Processing Your First Image

- Scaling Images While Preserving Aspect Ratio

- Improving OCR Accuracy

- Improving Image Quality

- Where to Go From Here?

Scaling Images While Preserving Aspect Ratio

The aspect ratio of an image is the proportional relationship between its width and height. Mathematically speaking, to reduce the size of the original image without affecting the aspect ratio, you must keep the width-to-height ratio constant.

When you know both the height and the width of the original image, and you know either the desired height or width of the final image, you can rearrange the aspect ratio equation as follows:

Height1/Width1 = Height2/Width2

Solving for the unknown dimension results in two formulas.

Formula 1: When the image’s width is greater than its height.

Height1/Width1 * width2 = height2

Formula 2: When the image’s height is greater than its width.

Width1/Height1 * height2 = width2
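As a quick check of the two formulas, here is a minimal sketch in plain Swift. The function name `aspectFitSize(for:maxDimension:)` is hypothetical and not part of the tutorial project; it only computes the target size and does no drawing.

```swift
import Foundation

/// Hypothetical helper (not part of the tutorial project): returns a size whose
/// longer side equals `maxDimension` and whose aspect ratio matches `original`.
func aspectFitSize(for original: CGSize, maxDimension: CGFloat) -> CGSize {
    if original.width > original.height {
        // Formula 1: height2 = height1 / width1 * width2
        return CGSize(width: maxDimension,
                      height: original.height / original.width * maxDimension)
    } else {
        // Formula 2: width2 = width1 / height1 * height2
        return CGSize(width: original.width / original.height * maxDimension,
                      height: maxDimension)
    }
}

// A 4000 x 3000 landscape photo with a maximum dimension of 1000.
let landscape = aspectFitSize(for: CGSize(width: 4000, height: 3000), maxDimension: 1000)
print(landscape.width, landscape.height)
```

For the 4000 x 3000 input, Formula 1 applies: 3000 / 4000 * 1000 gives a height of 750, so the result is 1000 x 750 and the 4:3 ratio is preserved.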

Now, add the following extension and method to the bottom of ViewController.swift:

    // MARK: - UIImage extension

    // 1
    extension UIImage {
      // 2
      func scaledImage(_ maxDimension: CGFloat) -> UIImage? {
        // 3
        var scaledSize = CGSize(width: maxDimension, height: maxDimension)
        // 4
        if size.width > size.height {
          scaledSize.height = size.height / size.width * scaledSize.width
        } else {
          scaledSize.width = size.width / size.height * scaledSize.height
        }
        // 5
        UIGraphicsBeginImageContext(scaledSize)
        draw(in: CGRect(origin: .zero, size: scaledSize))
        let scaledImage = UIGraphicsGetImageFromCurrentImageContext()
        UIGraphicsEndImageContext()
        // 6
        return scaledImage
      }
    }

This code does the following:

- The UIImage extension allows you to access any method(s) it contains directly through a UIImage object.

- scaledImage(_:) takes in the maximum dimension (height or width) you desire for the returned image.

- The scaledSize variable initially holds a CGSize with height and width equal to maxDimension.

- You calculate the smaller dimension of the UIImage such that scaledSize retains the image’s aspect ratio. If the image’s width is greater than its height, use Formula 1. Otherwise, use Formula 2.

- Create an image context, draw the original image into a rectangle of size scaledSize, then get the image from that context before ending the context.

- Return the resulting image.

Whew!

Now, at the top of performImageRecognition(_:), add:

let scaledImage = image.scaledImage(1000) ?? image

This attempts to scale the image so that its longer side is 1,000 points, which means it’s no bigger than 1,000 points wide or tall. If scaledImage(_:) fails to return a scaled image, the constant defaults to the original image.

Then, replace tesseract.image = image with:

tesseract.image = scaledImage

This assigns the scaled image to the Tesseract object instead.

Build, run and select the poem again from the photo library.

Much better. :]

But chances are that your results aren’t perfect. There’s still room for improvement…

Improving OCR Accuracy

“Garbage In, Garbage Out.” The easiest way to improve the quality of the output is to improve the quality of the input. As Google lists on their Tesseract OCR site, dark or uneven lighting, image noise, skewed text orientation and thick dark image borders can all contribute to less-than-perfect results.

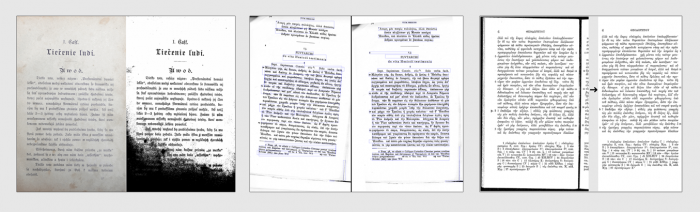

Examples of potentially problematic image inputs that can be corrected for improved results. Source: Google’s Tesseract OCR site.

Next, you’ll improve the image’s quality.

Improving Image Quality

The Tesseract iOS framework used to have built-in methods to improve image quality, but these methods have since been deprecated and the framework’s documentation now recommends using Brad Larson’s GPUImage framework instead.

GPUImage is available via CocoaPods, so immediately below pod 'TesseractOCRiOS' in Podfile, add:

pod 'GPUImage'

Then, in Terminal, re-run:

pod install

This should now make GPUImage available in your project.

Back in ViewController.swift, add the following below import TesseractOCR to make GPUImage available in the class:

import GPUImage

Directly below scaledImage(_:), also within the UIImage extension, add:

    func preprocessedImage() -> UIImage? {
      // 1
      let stillImageFilter = GPUImageAdaptiveThresholdFilter()
      // 2
      stillImageFilter.blurRadiusInPixels = 15.0
      // 3
      let filteredImage = stillImageFilter.image(byFilteringImage: self)
      // 4
      return filteredImage
    }

Here, you:

- Initialize a GPUImageAdaptiveThresholdFilter. The GPUImageAdaptiveThresholdFilter “determines the local luminance around a pixel, then turns the pixel black if it is below that local luminance, and white if above. This can be useful for picking out text under varying lighting conditions.”

- The blurRadiusInPixels property represents the average blur of each character in pixels. It defaults to 4.0, but you can play around with this value to potentially improve OCR results. In the code above, you set it to 15.0 since the average character blur appears to be around 15.0 pixels wide when the image is 1,000 points wide.

- Run the image through the filter to optionally return a new image object.

- If the filter returns an image, return that image.
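To build intuition for what an adaptive threshold does, here is a naive CPU sketch on a tiny grayscale grid. It is not GPUImage’s implementation, which runs on the GPU. It assumes luminance values in 0...1, a non-negative radius, and uses a plain average over a square window as the “local luminance.” The function name `adaptiveThreshold(_:radius:)` is hypothetical.

```swift
import Foundation

/// Illustrative only: a naive CPU adaptive threshold over a grayscale grid.
/// `pixels` holds luminance values in 0...1, row by row; `radius` (assumed to be
/// >= 0) is the half-width of the square window used as the "local luminance".
func adaptiveThreshold(_ pixels: [[Double]], radius: Int) -> [[Int]] {
    let rows = pixels.count
    let cols = pixels.first?.count ?? 0
    var output = Array(repeating: Array(repeating: 0, count: cols), count: rows)

    for y in 0..<rows {
        for x in 0..<cols {
            // Average the luminance of the neighbors that fall inside the image.
            var sum = 0.0
            var count = 0
            for ny in max(0, y - radius)...min(rows - 1, y + radius) {
                for nx in max(0, x - radius)...min(cols - 1, x + radius) {
                    sum += pixels[ny][nx]
                    count += 1
                }
            }
            let localMean = sum / Double(count)
            // Darker than its surroundings -> black (0); otherwise white (1).
            output[y][x] = pixels[y][x] < localMean ? 0 : 1
        }
    }
    return output
}

// The same "paper, ink, paper" pattern under bright and dim lighting.
let brightRegion = adaptiveThreshold([[0.9, 0.5, 0.9]], radius: 1)
let dimRegion = adaptiveThreshold([[0.4, 0.1, 0.4]], radius: 1)
print(brightRegion, dimRegion)
```

Both calls produce [[1, 0, 1]], even though the dim paper (0.4) is darker than the bright ink (0.5). A single global cutoff could not separate both regions correctly, which is why a local threshold helps with unevenly lit text.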

Back in performImageRecognition(_:), immediately underneath the scaledImage constant instantiation, add:

let preprocessedImage = scaledImage.preprocessedImage() ?? scaledImage

This code attempts to run the scaledImage through the GPUImage filter, but defaults to using the non-filtered scaledImage if preprocessedImage()’s filter fails.

Then, replace tesseract.image = scaledImage with:

tesseract.image = preprocessedImage

This asks Tesseract to process the scaled and filtered image instead.

Now that you’ve gotten all of that out of the way, build, run and select the image again.

Voilà! Hopefully, your results are now either perfect or closer-to-perfect than before.

But if the apple of your eye isn’t named “Lenore,” he or she probably won’t appreciate this poem coming from you as it stands… and they’ll likely want to know who this “Lenore” character is! ;]

Replace “Lenore” with the name of your beloved and… presto chango! You’ve created a love poem tailored to your sweetheart and your sweetheart alone.
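In code, that kind of swap boils down to a string replacement. The sketch below uses stand-in values: `recognizedText` is a sample line rather than the app’s actual OCR output, and `belovedName` is a made-up name.

```swift
import Foundation

// Stand-in values for illustration only: a sample line in place of the real
// OCR output, and a made-up name in place of what the user would enter.
let recognizedText = "For the rare and radiant maiden whom the angels name Lenore"
let belovedName = "Alex"

// Replace every exact (case-sensitive) occurrence of "Lenore".
let personalizedText = recognizedText.replacingOccurrences(of: "Lenore", with: belovedName)
print(personalizedText)
```

The match is case-sensitive and exact, so if OCR misreads a character in the name, the replacement won’t find it.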

That’s it! Your Love In A Snap app is complete — and sure to win over the heart of the one you adore.

Or if you’re anything like me, you’ll replace Lenore’s name with your own, send that poem to your inbox through a burner account, stay in for the evening, order in some Bibimbap, have a glass of wine, get a bit bleary-eyed, then pretend that email you received is from the Queen of England for an especially classy and sophisticated evening full of romance, mystery and intrigue. But maybe that’s just me…

Where to Go From Here?

Use the Download Materials button at the top or bottom of this tutorial to download the project if you haven’t already, then check out the project in Love In A Snap Final.

Try out the app with other text to see how the OCR results vary between sources and download more language data as needed.

You can also train Tesseract to further improve its output. After all, if you’re capable of deciphering characters using your eyes or ears or even fingertips, you’re a certifiable expert at character recognition already and are fully capable of teaching your computer so much more than it already knows.

As always, if you have comments or questions on this tutorial, Tesseract or OCR strategies, feel free to join the discussion below!