Kubernetes Tutorial for Swift on the Server

In this tutorial, you’ll learn how to use Kubernetes to deploy a Kitura server that’s resilient, with crash recovery and replicas. You’ll start by using the kubectl CLI, then use Helm to combine it all into one command. By David Okun.

Contents

- Cloud Native Development and Swift

- Cloud Native Computing Foundation

- Swift and Kubernetes

- Getting Started

- Installing Docker and Kubernetes

- Enabling Kubernetes in Docker

- Creating Your RazeKube

- Installing the Kitura CLI

- Running RazeKube

- Crashing Your RazeKube

- Kubernetes and the State Machine

- Building and Running Your RazeKube Docker Image

- Tagging Your RazeKube Docker Image

- Deploying RazeKube to Kubernetes

- Creating a RazeKube Service

- Recovering From a Crash

- Deploying Replicas

- Cleaning Up

- Helm: The Kubernetes Package Manager

- What’s in a Chart?

- Setting Up Helm and Tiller

- Deploying RazeKube With Helm

- Behold Your Charted RazeKube!

- Where to Go From Here?

As you learn more about developing apps on the server side, you’ll encounter many situations where you need tooling to handle tasks that live outside your source code. You’ll need to handle things like:

- Deployment

- Dependency management

- Logging

- Performance monitoring

While Swift on the server continues to mature, you can already draw on a collection of tools considered “Cloud Native” to handle these tasks for an API written in Swift!

In this tutorial, you’ll:

- Use Kubernetes to deploy and keep your app in flight.

- Use Kubernetes to replicate your app for high availability.

- Use Helm to combine all of the previous work you did with Kubernetes into one command.

This tutorial will use Kitura to build the API you will be working with, which is called RazeKube. Behold — the KUBE!

While the Kube is many things (all-seeing, all-knowing), there is one thing the Kube isn’t: resilient! You’re going to use Cloud Native tools to make it so!

In this tutorial, you will use the following:

- Kitura 2.7, or higher

- macOS 10.14, or higher

- Swift 5.0, or higher

- Xcode 10.2, or higher

- Terminal

Cloud Native Development and Swift

Here’s a short intro about what the Swift Server work group (SSWG) is working on and what Cloud Native Development entails.

Swift on the server draws inspiration from many different ecosystems. Vapor, Kitura and Perfect each borrow their architecture from projects written in other programming languages. The concept of futures and promises used in SwiftNIO isn’t native to Swift, although SwiftNIO is being developed with the goal of becoming a standard in its own right.

The members of the Swift Server work group meet bi-weekly to discuss advancements in the ecosystem. The group has a few goals, but this one is especially relevant to this tutorial:

Regardless of how you may feel about adopting third-party libraries, the concept of reducing repeated code is important and worth pursuing.

Cloud Native Computing Foundation

Another collective in pursuit of this goal is the Cloud Native Computing Foundation (CNCF). The primary charter of the CNCF is as follows:

Swift and Kubernetes

Swift developers who have focused their efforts on mobile devices haven’t worried much about standardization with other platforms. Apple has a reputation for producing iOS-centric design guidelines, and there are a number of tools for accomplishing similar goals, but they all live within one ecosystem: iOS.

In the world of server computing, multiple languages can solve almost any problem, and there are plenty of times when one programming language makes more sense than another. Rather than get into a “holy war” discussion about when Swift makes more sense than other languages, you’ll focus on using Swift as a means to an end. You’ll get a taste of the tools that can help you solve problems while you do it!

Kubernetes is the first tool you’re going to use. While Kubernetes is an important deployment tool in modern server-side development, it serves a number of other purposes as well, and you’ll explore those in this tutorial! You’ll dive right in after you make sure your app is working the way it needs to.

Getting Started

Click the Download Materials button at the top or bottom of this tutorial to get the project files you’ll use to build the sample Kitura app.

Next, you need to install Docker Desktop and the Kitura CLI to proceed with this tutorial.

- Audrey Tam has written an absolutely brilliant tutorial about how to use Docker here. This tutorial discusses Docker as a building block for other components, so I recommend giving Audrey’s tutorial a read before proceeding. She has also written a tutorial on how to deploy a Kitura app with Kubernetes here, which is worth a read to familiarize yourself with some of the basic concepts used in this one!

- Docker Desktop seems to be the most convenient way to run Kubernetes on your Mac lately, but you do have alternatives! You can try Minikube, or use a cloud provider to set up a hosted Kubernetes service. However, Docker Desktop includes support for Kubernetes as well as other things that will prove helpful throughout this tutorial.

Installing Docker and Kubernetes

If you’ve already installed Docker, start it up, then skip down to the next section: Enabling Kubernetes in Docker.

In a web browser, open https://www.docker.com/products/docker-desktop, and click the Download Desktop for Mac and Windows button:

On the next page, sign in or create a free Docker Hub account, if you don’t already have one. Then, proceed to download the installer by clicking the Download Docker Desktop for Mac button:

You’ll download a file called Docker.dmg. Once it has downloaded, double-click the file. You’ll see a dialog asking you to drag the Docker whale into your Applications folder.

When the installer appears, you’ll have to allow privileged access on your Mac. Make sure you install the Stable version; previous versions of Docker Desktop only included Kubernetes in the Edge version.

Enabling Kubernetes in Docker

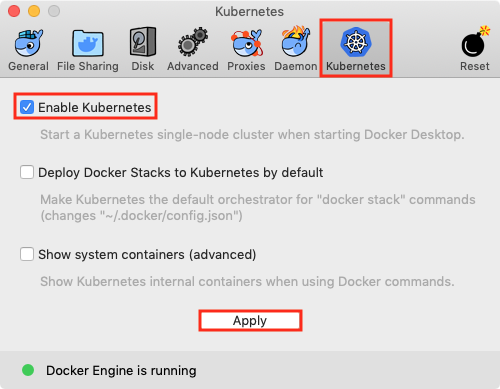

Once your installation has finished, open the Docker whale menu in the top toolbar of your Mac, and select Preferences. In the Kubernetes tab, check Enable Kubernetes, then click Apply:

You might have to restart Docker for this change to take effect. If you do, open Preferences again, and make sure the bottom of the window says that both Docker and Kubernetes are running.

To double-check that Docker is installed, open Terminal, and enter docker version — you should see output like this:

    Client: Docker Engine - Community
     Version:           18.09.2
     API version:       1.39
     Go version:        go1.10.8
     Git commit:        6247962
     Built:             Sun Feb 10 04:12:39 2019
     OS/Arch:           darwin/amd64
     Experimental:      false

    Server: Docker Engine - Community
     Engine:
      Version:          18.09.2
      API version:      1.39 (minimum version 1.12)
      Go version:       go1.10.6
      Git commit:       6247962
      Built:            Sun Feb 10 04:13:06 2019
      OS/Arch:          linux/amd64
      Experimental:     false
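You might prefer to script this check rather than read the output by eye. Below is a minimal Swift sketch that assumes you've captured the plain-text output of docker version into a string. `DockerVersions` and `parseDockerVersion(_:)` are hypothetical names for illustration; they are not part of Docker or Kitura. The sketch relies on the layout shown above: the first Version: line after Client: is the client version, and the first one after Server: is the engine version.

```swift
import Foundation

// Hypothetical helper type: the versions found in `docker version` output.
struct DockerVersions {
    var client: String?
    var server: String?
}

// Hypothetical helper: parses the plain-text output of `docker version`.
// Assumes the layout shown above, where the first "Version:" line after
// "Client:" is the client version and the first one after "Server:" is
// the engine version.
func parseDockerVersion(_ output: String) -> DockerVersions {
    var result = DockerVersions()
    var section = ""
    for rawLine in output.split(separator: "\n") {
        let line = rawLine.trimmingCharacters(in: .whitespaces)
        if line.hasPrefix("Client:") {
            section = "client"
        } else if line.hasPrefix("Server:") {
            section = "server"
        } else if line.hasPrefix("Version:") {
            // "API version:" and "Go version:" don't match this prefix.
            let value = line.dropFirst("Version:".count)
                .trimmingCharacters(in: .whitespaces)
            if section == "client", result.client == nil {
                result.client = value
            } else if section == "server", result.server == nil {
                result.server = value
            }
        }
    }
    return result
}

// Usage with an abbreviated copy of the output shown above.
let dockerOutput = """
Client: Docker Engine - Community
 Version:           18.09.2
 API version:       1.39
Server: Docker Engine - Community
 Engine:
  Version:          18.09.2
  API version:      1.39 (minimum version 1.12)
"""

let versions = parseDockerVersion(dockerOutput)
print("Client:", versions.client ?? "missing")
print("Server:", versions.server ?? "missing (is the Docker daemon running?)")
```

When the Docker daemon isn't running, docker version typically prints only the client section, followed by a connection error. A missing server version is therefore a reasonable hint that Docker itself hasn't started yet. For shell scripts, docker version --format '{{.Server.Version}}' avoids text parsing altogether.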

Additionally, to ensure Kubernetes is running, enter kubectl get all, and you should see that one service is running:

    NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
    service/kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   16h
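In the same spirit, here is a rough Swift sketch that confirms the service/kubernetes row is present. It assumes the six-column layout shown above (NAME, TYPE, CLUSTER-IP, EXTERNAL-IP, PORT(S), AGE). `ServiceRow` and `parseServices(_:)` are hypothetical helpers. Splitting on whitespace only works because none of these columns contain spaces.

```swift
// Hypothetical helper type: one service row from `kubectl get all`.
struct ServiceRow {
    let name: String
    let type: String
    let clusterIP: String
    let ports: String
}

// Hypothetical helper: picks the service rows out of `kubectl get all` output.
// Assumes the six-column layout shown above:
// NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
func parseServices(_ output: String) -> [ServiceRow] {
    var rows: [ServiceRow] = []
    for line in output.split(separator: "\n") {
        // Split on runs of spaces or tabs; empty pieces are dropped by default.
        let columns = line.split(whereSeparator: { $0 == " " || $0 == "\t" })
            .map { String($0) }
        // Skip headers and rows for other resource kinds (pods, deployments, ...).
        guard columns.count == 6, columns[0].hasPrefix("service/") else { continue }
        rows.append(ServiceRow(name: columns[0], type: columns[1],
                               clusterIP: columns[2], ports: columns[4]))
    }
    return rows
}

let kubectlOutput = """
NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
service/kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   16h
"""

let services = parseServices(kubectlOutput)
let apiServerListed = services.contains { $0.name == "service/kubernetes" }
print(apiServerListed ? "Found service/kubernetes" : "service/kubernetes not found")
```

Column splitting is fine for a quick check. For anything more robust, kubectl get services -o json returns structured output instead of a table formatted for humans.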