Swift Tutorial Part 2: Types and Operations

Welcome to the second part of this mini-series on learning Swift, where you’ll learn to use strings, type conversion, type inference and tuples. By Lorenzo Boaro.



30 mins

Welcome to the second part of this mini-series on learning Swift!

This part carries on from Part 1: Expressions, Variables & Constants. We recommend you start with the first part of this tutorial series to get the most out of it.

It’s time to learn more about types! Formally, a type describes a set of values and the operations that can be performed on them.

In this tutorial, you’ll learn how to handle the different types in the Swift programming language. You’ll learn how to convert between types, and you’ll also be introduced to type inference, which makes your life as a programmer a lot simpler.

Finally, you’ll learn about tuples, which allow you to make your own types made up of multiple values of any type.

Getting Started

Sometimes, you’ll have data in one format and need to convert it to another. The naïve way to attempt this would be like so:

var integer: Int = 100
var decimal: Double = 12.5
integer = decimal

Swift will complain if you try to do this and spit out an error on the third line:

Cannot assign value of type 'Double' to type 'Int'

Some programming languages aren’t as strict and will perform conversions like this automatically. Experience shows that this kind of automatic conversion is a common source of software bugs, and it often hurts performance. Swift prevents you from assigning a value of one type to a variable of another type, which avoids these issues.

Remember, computers rely on programmers to tell them what to do. In Swift, that includes being explicit about type conversions. If you want the conversion to happen, you have to say so!

Instead of simply assigning, you need to explicitly write that you want to convert the type. You do it like so:

var integer: Int = 100
var decimal: Double = 12.5
integer = Int(decimal)

The assignment on the third line now tells Swift, unequivocally, that you want to convert from the original type, Double, to the new type, Int.

The integer variable ends up with the value 12 instead of 12.5, because converting a Double to an Int discards the fractional part. This is why it’s important to be explicit. Swift wants to make sure that you know what you’re doing and that you understand you may lose data by performing the type conversion.
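To see exactly what the conversion does, here is a small sketch you can paste into a playground. It uses only the standard library: Int(_:) applied to a Double drops the fractional part (it truncates toward zero), and if you’d rather round, Double’s rounded() method lets you pick a rounding rule first. The constant names are illustrative.

```swift
let decimal: Double = 12.5

// Int(_:) truncates toward zero: the fractional part is simply discarded.
let truncated = Int(decimal)                    // 12
let truncatedNegative = Int(-decimal)           // -12, not -13

// To round instead, round the Double first, then convert.
// rounded() with no argument sends halfway cases away from zero.
let rounded = Int(decimal.rounded())            // 13
let roundedDown = Int(decimal.rounded(.down))   // 12

print(truncated, truncatedNegative, rounded, roundedDown)
```

Rounding before converting keeps the decision about lost data in your hands, which is the same explicitness Swift asks for with the conversion itself.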

Operators With Mixed Types

So far, you’ve only seen operators acting independently on integers or doubles. But what if you have an integer that you want to multiply by a double?

You might think you could do it like this:

let hourlyRate: Double = 19.5
let hoursWorked: Int = 10
let totalCost: Double = hourlyRate * hoursWorked

If you try that, you’ll get an error on the final line:

Binary operator '*' cannot be applied to operands of type 'Double' and 'Int'

This is because, in Swift, you can’t apply the * operator to mixed types. This rule also applies to the other arithmetic operators. It may seem surprising at first, but Swift is being rather helpful.

Swift forces you to be explicit about what you mean when you want an Int multiplied by a Double, because the result can be only one type. Do you want the result to be an Int, converting the Double to an Int before performing the multiplication? Or do you want the result to be a Double, converting the Int to a Double before performing the multiplication?

In this example, you want the result to be a Double. You don’t want an Int, because you would then have to convert the hourlyRate constant into an Int before the multiplication, which would truncate it to 19 and lose the precision of the Double.

You need to tell Swift you want it to consider the hoursWorked constant to be a Double, like so:

let hourlyRate: Double = 19.5
let hoursWorked: Int = 10
let totalCost: Double = hourlyRate * Double(hoursWorked)

Now, each of the operands will be a Double when Swift multiplies them, so totalCost is a Double as well.
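Putting both choices side by side makes the difference concrete. The sketch below repeats hourlyRate and hoursWorked so it runs on its own; costAsDouble and costAsInt are illustrative names. Converting the Int up to a Double keeps the half-dollar, while converting the Double down to an Int silently loses it.

```swift
let hourlyRate: Double = 19.5
let hoursWorked: Int = 10

// Option 1: convert the Int to a Double, so the multiplication happens in Double.
let costAsDouble: Double = hourlyRate * Double(hoursWorked)   // 195.0

// Option 2: convert the Double to an Int first.
// Int(19.5) truncates to 19, so the total comes out 5 short.
let costAsInt: Int = Int(hourlyRate) * hoursWorked            // 190

print(costAsDouble, costAsInt)
```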

Type Inference

Up to this point, each time you’ve seen a variable or constant declared, it’s been accompanied by an associated type, like this:

let integer: Int = 42
let double: Double = 3.14159

You may be asking yourself: “Why must I write the : Int and : Double, when the right-hand side of the assignment is already an Int or a Double?” It’s redundant, to be sure; you can see this without too much work.

It turns out that the Swift compiler can deduce this as well. It doesn’t need you to tell it the type all the time — it can figure it out on its own. This is done through a process called type inference. Not all programming languages have this, but Swift does, and it’s a key component of Swift’s power as a language.

So you can simply drop the type in most places where you see one.

For example, consider the following constant declaration:

let typeInferredInt = 42

Sometimes, it’s useful to check the inferred type of a variable or constant. You can do this in a playground by holding down the Option key and clicking on the variable’s or constant’s name. Xcode will display a popover showing its declaration.

Xcode tells you the inferred type by giving you the declaration that you would have had to use if there were no type inference. In this case, the type is Int.

It works for other types, too:

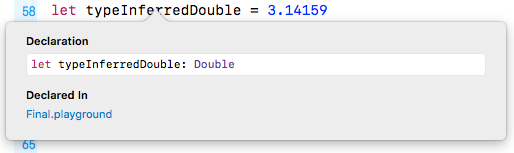

let typeInferredDouble = 3.14159

Option-clicking on this reveals that its inferred type is Double.

Type inference isn’t magic. Swift is simply doing what your brain does very easily. Programming languages that don’t use type inference can often feel verbose, because you need to specify the often obvious type each time you declare a variable or constant.
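Option-clicking only works inside Xcode. If you want to check an inferred type in code, for example in a script, the standard library function type(of:) returns a value’s dynamic type, which for simple constants like these matches what the compiler inferred. A minimal sketch:

```swift
// No type annotations: Swift infers each type from the literal.
let typeInferredInt = 42
let typeInferredDouble = 3.14159

// type(of:) returns the value's metatype; printing it shows the type's name.
print(type(of: typeInferredInt))     // Int
print(type(of: typeInferredDouble))  // Double

// Comparing metatypes confirms the inference.
let intWasInferred = type(of: typeInferredInt) == Int.self
let doubleWasInferred = type(of: typeInferredDouble) == Double.self
```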

Sometimes, you want to define a constant or variable and ensure it’s a certain type, even though what you’re assigning to it is a different type. You saw earlier how you can convert from one type to another. For example, consider the following:

let wantADouble = 3

Here, Swift infers the type of wantADouble as Int. But what if you wanted Double instead?

What you could do is the following:

let actuallyDouble = Double(3)

This is the same as you saw before with type conversion.

Another option would be not to use type inference at all and do the following:

let actuallyDouble: Double = 3

There is a third option, like so:

let actuallyDouble = 3 as Double

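This uses a new keyword you haven’t seen before, as, which tells Swift to treat the literal 3 as a Double. Unlike Double(3), as can’t turn an existing Int value into a Double; it works here only because 3 is a literal.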

A literal number value that doesn’t contain a decimal point can be used as either an Int or a Double. This is why you’re allowed to assign the value 3 to the constant actuallyDouble.
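Here are the three approaches side by side. All of them produce the same Double value, but the last two rely on 3 being a literal. The commented-out line shows the case as can’t handle: someInt is a hypothetical constant that has already been given the type Int, so it has to go through Double(_:) instead.

```swift
// Three ways to end up with a Double holding 3.0.
let viaInitializer = Double(3)      // explicit conversion
let viaAnnotation: Double = 3       // type annotation, no inference
let viaAs = 3 as Double             // "as" fixes the literal's type

// With an existing Int value, only the initializer works.
let someInt = 3                     // inferred as Int
let converted = Double(someInt)
// let broken = someInt as Double   // does not compile: an Int value can't be coerced to Double
```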

Literal number values that do contain a decimal point cannot be integers. This means you could have avoided this entire discussion had you started with:

let wantADouble = 3.0

Sorry! (Not sorry!) :]

Literal values such as 3 don’t have a type of their own. It’s only when you use them in an expression, or assign them to a constant or variable, that Swift infers a type for them.
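Because a literal takes its type from context, the same 3 can end up as an Int or a Double. In the sketch below (all names are illustrative), 3 + 0.5 compiles because Swift gives the literal 3 the type Double to match 0.5. Adding a Double to a constant that is already an Int, on the other hand, still needs an explicit conversion, just as in the hourly-rate example.

```swift
// Both operands are literals, so Swift picks a type that fits both: Double.
let mixedLiterals = 3 + 0.5          // 3.5, inferred as Double

// With no decimal point anywhere, the default is Int.
let allIntegers = 3 + 4              // 7, inferred as Int

// Once the other operand has a type, the literal adapts to it.
let half: Double = 0.5
let literalAdapts = 3 + half         // the literal 3 is treated as a Double

let three = 3                        // inferred as Int
// let fails = three + half          // does not compile: Int + Double
let works = Double(three) + half     // 3.5
```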